The relationship between linguistics and artificial intelligence is still controversial. The relevance of this paper lies in the interpretation of technological progress as promising for the field of linguistics, since the synergy between artificial intelligence and linguistics can lead to a rethinking of linguistic paradigms. This paper is methodologically based on a detailed study of the related literature on the recent history of these two fields. As a result, the paper offers a detailed analysis of the related scientific literature and revises the relationship between linguistics and artificial intelligence. Artificial intelligence is seen as a branch of human science, and models have emerged in the intermediate period between the two fields, which were not always understood, but acted as buffers against the interaction of one field with the other; if artificial intelligence is a branch of human science, then linguistics can also adopt this heritage, which may lead to a rethinking of the paradigms of linguistics and challenge the opposition between the exact and experimental sciences. The evolution of the ways of thinking about automatic language processing from the cognitive science point of view of artificial intelligence is traced, and different types of knowledge considered in linguistic computing are discussed. The importance of language both in human-machine communication and in the development of reasoning and intelligence is emphasised. Ideas that can overcome the internal contradiction of artificial intelligence are put forward. The practical significance of the paper is that it highlights how, with the help of automatic language processing, humanity has gradually realised the need to use technological knowledge to understand language. 
This combination opens up not only knowledge about the language itself, but also general knowledge about the world, about the speaker’s culture, about the communication situation, and about the practice of human relations. The conclusions of the study point to the great potential of interaction between linguistics and artificial intelligence for the further development of both fields.

Copyright © 2024 Ivan Bakhov, Nataliia Ishchuk, Iryna Hrachova, Liliana Dzhydzhora and Iryna Strashko. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Artificial intelligence is influencing the development of contemporary philology, contributing to the automation of linguistic research and improving the quality of its results. The study of the presented topic is relevant in terms of comprehensive scientific progress, as the use of various automated tools in linguistics allows for faster text analysis, categorisation of language units, and identification of linguistic patterns [2]. Wang et al. [1] postulate that at the beginning of their emergence, computers were mostly used in industry and the military, and sponsors of large projects, in particular the United States, directed them to decrypt intelligence documents. Since the 1950s, numerous optimistic studies in linguistics have been published. The main focus was on the study of dictionaries, and translation was seen as a substitution of words with possible further grammatical transformation, without going into deep linguistic aspects. This brief historical overview highlights the relevance of artificial intelligence research in linguistics. When artificial intelligence systems were initially studied, linguists believed that automatic translation cost twice as much and produced less efficient results than human translation. However, over time, new concepts and hypotheses were put forward that opened up new opportunities for the development of artificial intelligence in linguistics [3]. Scientists have begun to consider the possibility of simulating any aspect of human intelligence using computer programs.

Previous studies have focused on a fundamental problem in the representation and use of human knowledge. Scientists have tried to prove that contextual and encyclopedic knowledge is necessary for successful information processing, which has introduced new aspects to the study of artificial intelligence systems in linguistics [4]. Thus, this issue is relevant and important for the further development of modern technologies in linguistics and philology.

In this aspect, Schmitt Peter emphasised that for successful information processing it is necessary to have contextual and encyclopaedic knowledge, which introduced a fundamental problem in the representation and use of human knowledge [5]. Although cognitive aspects related to the concept of knowledge began to emerge, scientists, without paying attention to the processes of human information processing, concluded that these aspects were difficult to take into account.

In the initial study of artificial intelligence systems, it was believed that automatic translation was less effective than human translation. The advancement of fundamental ideas for creating artificial intelligence made it possible to hypothesise that any aspect of human intelligence could be simulated by computer programs [6]. This concept played an important role in the development of cognitivism and opened up new perspectives in psychology, linguistics, computer science, and philosophy.

The first concept of communication emerged from information theory. According to this point of view, the speaker has a message in his head that he wants to convey, and accordingly, there are rules for encoding this message. Following these rules allows you to create an expression aimed at encoding the meaning of this message [7]. The listener uses a decoding process that allows them to identify the sounds used, syntactic structures, and semantic relationships and combines all these elements to reconstruct the meaning of the message they understand. This model is based on aspects of language communication, such as the fact that communication is considered successful when the recognised message is identical to the original message (and, accordingly, unsuccessful when the two messages differ) [8]. Language was seen as a bridge that conveys private ideas through public sounds exchanged between interlocutors.

In the context of the above, the purpose of the article is to study the impact of artificial intelligence on the development of modern philology, in particular in the automation of linguistic research and improvement of the quality of its results. Consideration of the stages of development of the use of artificial intelligence in linguistics and discussion of the initial concepts of communication will prove their role in understanding language. Modern approaches to the use of artificial intelligence in linguistic research and their impact on research practice determine the course of modern linguistic theories and grammars, their advantages and disadvantages, as well as the search for ways to combine different approaches to create a more complete and more accurate model of language. To achieve this goal, the following tasks have been identified: to analyse various branches of linguistics, to review and compare different grammatical theories, to study the impact of these theories on the development of artificial intelligence and machine learning, and to consider the possibilities of using modern technologies to develop new methods of language analysis and to advance linguistics in general.

The study involved the use of several methods of scientific knowledge, such as literature analysis, content analysis, study of theoretical concepts, personal experience, and expert opinions. First, the authors analysed national and international publications on linguistics and artificial intelligence, as well as their own research. Then, analytical and interpretive approaches were used to solve the tasks.

No experiments, surveys, or other empirical methods were used in this study. Still, it involved an analysis of publications by leading scholars in the field, as well as a comparison of approaches to the study of linguistics using artificial intelligence in different countries.

The authors of the article, who have experience in the field of linguistics and artificial intelligence, as well as other researchers whose publications were analysed, participated in the study. To conduct the study, the authors used a variety of materials, including the results of the literature analysis, their own research, articles by leading scholars, theories in the field of philology and linguistics, as well as proposals for further research directions.

Consequently, the research methodology was carefully chosen to obtain objective and well-established data in the field of linguistics and artificial intelligence.

Thus, this perception assumes that the communicative intention arises from the literal meaning of the discourse and can be recognised by means of various grammars and language conventions. Communication is considered possible because the communicative intention is encoded in the message according to conventions [9]. In the initial theories of communication, language was seen as an object with a coherent internal structure that can be studied independently of its use [10]. However, various examples demonstrate that more than just language is needed to determine the true intention that the speaker wants to express. As different comprehension mechanisms are triggered during production, time constraints and comprehension speeds mean that the underlying architecture is consistent. In computer implementations, comprehension has been viewed as a sequential process of converting one representational language to another. This is in line with early models of natural language processing, where it was assumed that linguistic sentences reflect real-world facts.

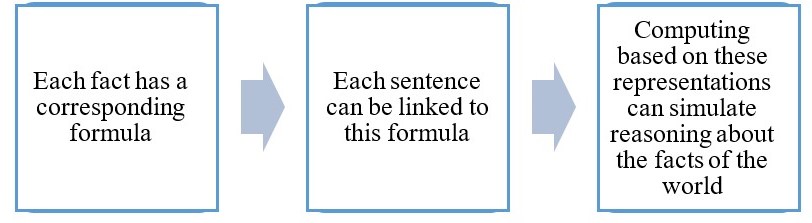

In addition, the scientists assumed that it was possible to create a formal representation system (Figure 1) in which the operations performed on the structures of representations were justified through the correspondence between these representations and the real world, not just language [11]. It should be noted that this model was actively discussed by philosophers of language long before the emergence of artificial intelligence [12]. However, the main difference is that most artificial intelligence systems do not have the ability to interact with the real world without the help of a programmer. These systems are based on symbolic systems and require human interpretation to compensate for the lack of perception and action. The question remains, however, whether perception and action are not necessary prerequisites for understanding meaning.

In early language processing systems, it was believed that only a few words and a limited number of syntactic rules were sufficient to perform certain tasks with language, such as answering questions. These systems did not address complex language problems and worked only in limited domains. To make them work, you had to create a lexicon of keywords, write an analyser to filter them, and configure the program to perform the appropriate actions. The most famous of these systems include BASEBALL, STUDENT, and ELIZA. These programs were limited to words and had a very basic syntax [13]. Early language processing systems illustrated the keyword technique but had their limitations. Problems arose when attempts were made to expand the scope of the application because it was difficult to create a complete list of the necessary keywords. There were also situations where a keyword could have multiple interpretations or was missing. In this case, more sophisticated methods had to be considered.
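The keyword technique described above can be sketched in a few lines. This is only an illustration under stated assumptions: the lexicon `KEYWORD_RESPONSES`, the canned responses, and the function name `respond` are hypothetical and are not taken from BASEBALL, STUDENT, or ELIZA.

```python
import re

# Hypothetical keyword lexicon: each keyword triggers a canned response.
KEYWORD_RESPONSES = {
    "mother": "Tell me more about your family.",
    "sad": "Why do you feel sad?",
    "score": "Looking up the score...",
}
DEFAULT_RESPONSE = "Please go on."


def respond(utterance: str) -> str:
    """Return the response attached to the first known keyword found."""
    # The "analyser" is only a filter: lowercase the input and split it into words.
    tokens = re.findall(r"[a-z']+", utterance.lower())
    for token in tokens:
        if token in KEYWORD_RESPONSES:
            return KEYWORD_RESPONSES[token]
    # No keyword present: the system has nothing to work with.
    return DEFAULT_RESPONSE
```

The sketch also shows the limitations noted above: an utterance without any listed keyword falls through to the default response, and a keyword with several senses always triggers the same action.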

Chomsky developed the theory of formal and transformational grammar, which has greatly influenced linguistic research. This theory, which was aimed at formalising the linguistic competence of speakers, generated only syntactic procedures, but remained difficult to use in the field of artificial intelligence: for example, a sentence with 17 words can have 572 syntactic interpretations. To solve these problems, the theory has evolved into different forms, mainly through the role of the lexicon (extended standard theory, trace theory, ordering, and linking) [14]. Logical grammars and generalised syntactic grammars are the most interesting extensions. The former allow for the direct construction of surface structures, while the latter focus more on semantic aspects.
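The growth in the number of syntactic interpretations can be illustrated with a deliberately extreme toy case. The grammar `X -> X X | word` used below is an assumption chosen for illustration, not Chomsky's grammar, and its counts are not the figure of 572 cited above; the sketch only shows how quickly the number of analyses can grow with sentence length when a grammar permits every binary bracketing.

```python
from functools import lru_cache


def count_binary_parses(n_words: int) -> int:
    """Count parse trees of an n-word string under the toy grammar
    X -> X X | word, in which every split point is allowed."""
    if n_words < 1:
        raise ValueError("need at least one word")

    @lru_cache(maxsize=None)
    def count(length: int) -> int:
        if length == 1:
            return 1  # a single word has exactly one analysis
        # Sum over every position where the span can be split in two.
        return sum(count(k) * count(length - k) for k in range(1, length))

    return count(n_words)
```

For example, `count_binary_parses(4)` returns 5 and `count_binary_parses(5)` returns 14. Realistic grammars restrict many of these bracketings, but the same combinatorial pressure remains.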

Chain grammar, similar to formal context-free grammar, allowed more flexibility for various practical tasks, in particular for expressing the relative order of sentence components. This grammar became the basis for one of the first automatic English language analysers. The move towards semantics came with case grammars, which took into account the importance of identifying the type of relationship between a verb and its complements. These grammars had two main advantages for artificial intelligence: a model of deep sentence structure with a focus on semantics and a semantic analysis engine that could exploit syntactic constraints. These grammars allowed processing even non-normalised sentences and selecting the optimal system for specific tasks [15].

Halliday’s systemic grammars focused on the functional organisation of language and the relationships between textual form and context, not treating language as an isolated formal system, unlike formal grammar. They described sentences using sets of features for further use by other processes [16]. These grammars helped to take into account contextual aspects and make informed decisions using simple computer processes that interacted with each other. Their use allowed comparing different mechanisms that different languages use to solve the same phenomenon.

It is worth noting the importance of these grammars for artificial intelligence on two levels. Theoretically, they are key in the approaches to semantics and even the pragmatics they enable, particularly in everything related to managing human-computer dialogues. In practice, such a control technique is particularly well understood in the IT field, and its practical implementation on a computer is very simple.

The subsequent emergence of a variety of grammatical theories gave the lexicon primary importance. They explored the relationships between the different levels of language – lexical, syntactic, and semantic – and generally led to richer and more flexible models than Chomsky’s for automatic processing [17].

Similarly to systemic grammars, functional grammars aimed to emphasise the role of functional and relational aspects in linguistic expressions compared to the categorical concepts of formal grammars. Here, language was seen as a means of social interaction, not just a static description of a set of sentences. Its main goal was communication, not just expression, and priority was given to language use over theoretical competence [18]. Thus, functional grammarians viewed lexical knowledge, knowledge of structures, and grammatical rules as expressions of constraints.

In search of a unique formalism to reconcile these aspects, Kay proposed the concept of functional description, which is based on the idea of covering partial descriptions and leads to the notion of a unification grammar. Bresnan and Kaplan, building on these ideas, developed lexical functional grammars, which use the notion of simultaneous equations to semantically interpret the structure built by a context-free grammar [19]. This theory aims at modelling the syntactic knowledge required to determine the relations between the predicative semantic aspects necessary for the meaning of a sentence and the choice of words and sentence structures that allow expressing these relations. Lexical functional grammars are one of the most advanced linguistic theories due to the introduction of the concepts of formal semantics. They still find numerous applications in the field of artificial intelligence. The most important differences from the transformational grammars they are derived from include the following (Table 1):

| Aspect | Transformational and functional grammars compared |
| --- | --- |
| Psychological aspects | Transformational grammar defines human language ability (explaining that only humans can learn language). Functional grammar aims to explain how language ability interacts with other mental processes in language comprehension and production. |
| Structures and functions | The notion of structure is primary, and the grammatical roles of the various components are derived from it. In contrast, in functional grammars, grammatical function is primary and structure secondary. |
| The role of vocabulary | Vocabulary is fundamental for transformationalists. Each word form can correspond to several separate entries if they fulfil different roles. In functional grammars, syntactic aspects are taken into account in the lexical characteristics of a word. |
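The idea of combining partial descriptions, which underlies unification grammars, can be sketched as follows. This is a simplified illustration, not Kay's or Bresnan and Kaplan's formalism: feature structures are assumed to be nested Python dictionaries with string values, shared (re-entrant) substructures are not modelled, and the function name `unify` and the feature names in the usage note are hypothetical.

```python
from typing import Optional, Union

# Assumption: a feature structure is either an atomic string or a nested dict.
Feature = Union[str, dict]


def unify(a: Feature, b: Feature) -> Optional[Feature]:
    """Merge two partial descriptions; return None if they contradict."""
    if isinstance(a, dict) and isinstance(b, dict):
        result = dict(a)  # shallow copy so the inputs are not modified
        for key, b_value in b.items():
            if key in result:
                merged = unify(result[key], b_value)
                if merged is None:
                    return None  # conflicting values for the same feature
                result[key] = merged
            else:
                result[key] = b_value  # feature only described by b
        return result
    # Atomic values (or a dict against an atom) unify only if they are equal.
    return a if a == b else None
```

For instance, unifying `{"cat": "N", "agr": {"num": "sg"}}` with `{"agr": {"num": "sg", "per": "3"}}` yields a single description carrying all three features, while unifying it with `{"agr": {"num": "pl"}}` fails and returns `None`.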

However, both grammars explain that linguistic theories cannot explain all aspects of languages, since neither is based on a complete list of facts that can be explained in detail. By focusing on confirmed facts, they then proceed from a model of language that is very limited and introduces only the abstractions necessary to explain observations. This leads to the creation of lexico-grammatical systems that explain the possibilities of combining words with each other. In particular, scientists believe that the lexicon is present at all levels of its model and covers the concepts of “full meaning words” and lexical functions [20]. The described grammars had a great influence on the introduction of artificial intelligence into linguistics. They attached great importance to semantics and created a language based on a detailed lexical list that inseparably combined form and meaning. In this aspect, we can also distinguish semantic grammars, which simplified the processing of sentences by checking the semantic conformity with the grammar, without paying too much attention to syntax. Such grammars are very flexible and easy to implement in limited domains but may be limited in understanding and difficult to apply in other domains.

The scientific contributions of linguistic theorists described above have made it possible to automate natural language processing using artificial intelligence. Automated tools allow linguists to efficiently analyse large amounts of language data. For example, word processing software can automatically detect the frequency of certain words or constructions, perform morphological analysis of text, highlight keywords, perform sentence parsing, etc. Such tools greatly facilitate and speed up research, as well as provide more objective results by eliminating the human factor. They also allow you to analyse language material from different angles and go beyond traditional research methods.

Popular automated tools for linguistic research include Python NLTK (Natural Language Toolkit), R for statistical analysis of language data, as well as specialised programs for analysing texts and corpora, such as AntConc, Corpus Query Processor, WordSmith Tools, etc. [21].
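As an illustration of the kind of frequency analysis mentioned above, the following sketch counts words and adjacent word pairs using only the Python standard library. It is not the API of NLTK, AntConc, or any other tool named here; the function names and the simple regular-expression tokeniser are assumptions made for the example.

```python
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into word tokens (a crude tokeniser)."""
    return re.findall(r"\w+", text.lower())


def word_frequencies(text: str) -> Counter:
    """Count how often each word form occurs."""
    return Counter(tokenize(text))


def bigram_frequencies(text: str) -> Counter:
    """Count adjacent word pairs; pairs may span sentence boundaries."""
    tokens = tokenize(text)
    return Counter(zip(tokens, tokens[1:]))
```

Calling `word_frequencies(text).most_common(10)` lists the ten most frequent word forms of a text. Dedicated corpus tools add lemmatisation, concordances, and statistical tests on top of counts of this kind.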

Recent advances in natural language processing using artificial intelligence include the following main characteristics (Table 2):

| Characteristic | Description |
| --- | --- |
| Deep learning | The use of neural networks and deep learning to solve complex natural language processing tasks, such as machine translation, speech recognition, semantic text analysis, etc. |
| Improving translation quality | Developing new methods to improve the quality of automatic machine translation, including taking into account context, style, language features, and other aspects of the language. |
| Development of speech recognition models | Creating more accurate and efficient models for voice, text, and chatbot recognition to improve communication with users and automate interactions. |
| Analysis of emotions | Using natural language processing techniques to analyse the sentiment of reviews, comments, and social media to identify the moods and reactions of a group of people. |
| Development of dialogue systems | Creating more intelligent dialogue systems that can interact with people in natural language, understand their needs, and answer questions. |

In general, recent advances in natural language processing using artificial intelligence are aimed at creating more intelligent and efficient systems to facilitate communication between humans and machines.

There are three main characteristics of the latest advances in natural language processing using artificial intelligence (Figure 1):

Robust parsing is the ability to always provide an answer, even in the presence of unexpected data, such as spelling or grammatical errors, omissions, or unknown words. A robust parser should be able to perform a syntactic analysis of every sentence in the text, including sentences whose structure does not fit the grammar [22]. For this purpose, partial parsing is often used: the parser processes each part of the sentence as far as possible and returns a parse built from these partial elements when a complete parse is not possible. This strategy helps to avoid the combinatorial explosion that often occurs with parsing.
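Partial parsing as described above can be sketched with a greedy noun-phrase chunker. This is a minimal illustration and not the method of any system cited here: the input is assumed to be already tagged with a hypothetical tag set (`DET`, `ADJ`, `NOUN`, and anything else), and the single pattern `DET? ADJ* NOUN+` stands in for a real grammar.

```python
def chunk_noun_phrases(tagged):
    """Greedy partial parse over (word, tag) pairs.

    Groups DET? ADJ* NOUN+ sequences into NP chunks. Tokens that fit no
    pattern (unknown words, errors) are returned unattached, so the
    function always produces an analysis.
    """
    result = []
    i, n = 0, len(tagged)
    while i < n:
        j = i
        if tagged[j][1] == "DET":  # optional determiner
            j += 1
        while j < n and tagged[j][1] == "ADJ":  # any number of adjectives
            j += 1
        noun_start = j
        while j < n and tagged[j][1] == "NOUN":  # one or more nouns
            j += 1
        if j > noun_start:
            # At least one noun was found: emit a complete NP chunk.
            result.append(("NP", [word for word, _ in tagged[i:j]]))
            i = j
        else:
            # No NP starts here: keep the token as it is and move on.
            result.append((tagged[i][1], [tagged[i][0]]))
            i += 1
    return result
```

Because each token is visited a bounded number of times, the running time grows linearly with sentence length, which is the practical benefit of giving up on a single complete parse.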

One of the major obstacles to the development of a robust parser is the lack of a comprehensive grammar covering all possible forms of language. However, due to the large number of electronic texts that are now available, other approaches have been developed: statistical (and learning) methods have been widely used for automatic language processing. Statistical analysis involves extracting grammatical rules and calculating the frequency of occurrence of words or groups of words [23]. These frequencies indicate certain associative structures and contexts of their use. Statistical methods are very effective in automatic parsing, with recognition accuracy of 95% to 98%. Existing statistical analysers generate all possible parsing options for each sentence of a text, and then select the most likely one based on the frequency of occurrence of word sequences [24]. For example, researchers describe a statistical analyser that builds a grammar based on a corpus of syntactically annotated texts.
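The idea of building a grammar from an annotated corpus and then choosing the most likely analysis can be sketched as follows. This is a simplified illustration, not the analyser referred to in [24]: trees are assumed to be nested tuples of the form `(label, child, ...)` with words as plain strings, rule probabilities are plain relative frequencies without smoothing, and the candidate analyses are assumed to be supplied by some other component.

```python
from collections import Counter
from math import prod


def rules(tree):
    """Yield the (lhs, rhs) productions used in a nested-tuple tree."""
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    yield (label, rhs)
    for child in children:
        if not isinstance(child, str):
            yield from rules(child)


def estimate_rule_probabilities(treebank):
    """Relative-frequency estimate: count(rule) / count(left-hand side)."""
    rule_counts = Counter(r for tree in treebank for r in rules(tree))
    lhs_counts = Counter()
    for (lhs, _), count in rule_counts.items():
        lhs_counts[lhs] += count
    return {rule: count / lhs_counts[rule[0]] for rule, count in rule_counts.items()}


def tree_probability(tree, probs):
    """Product of the probabilities of all rules in the tree (0 if unseen)."""
    return prod(probs.get(rule, 0.0) for rule in rules(tree))


def most_likely_parse(candidates, probs):
    """Select the candidate analysis with the highest probability."""
    return max(candidates, key=lambda tree: tree_probability(tree, probs))
```

In a realistic system the unseen-rule probability of zero would be replaced by a smoothed estimate, and the candidates would come from a chart parser rather than being listed by hand.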

The number of large text corpora available in electronic form in different languages is growing. These corpora contain linguistic information and are an important source for linguistic research and applications in the field of automatic language processing. Work on corpora for English lasted for about ten years and contributed to the development of corpus linguistics and the improvement of processing programs [25]. These corpora have also allowed the development of effective evaluation methods. In the case of writing, morpho-syntactic systems can be evaluated quite accurately, but the evaluation of analysers will be more difficult. Therefore, research into protocols for evaluating comprehension systems is currently relevant. It is important to note that these assessments are based on comparisons with marking by experts, so they are not absolute.
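Evaluation against expert annotation, as mentioned above for morpho-syntactic systems, often reduces to comparing system output with a gold standard token by token. The sketch below assumes both are given as equal-length lists of tags; the function name and the tag labels in the usage note are illustrative only.

```python
def tagging_accuracy(predicted, gold):
    """Share of tokens whose predicted tag matches the expert (gold) tag."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        raise ValueError("cannot evaluate an empty sequence")
    correct = sum(p == g for p, g in zip(predicted, gold))
    return correct / len(gold)
```

For example, `tagging_accuracy(["DET", "NOUN", "VERB", "NOUN"], ["DET", "NOUN", "VERB", "ADJ"])` gives 0.75. As the text notes, such a score is relative to the experts' annotation and inherits any disagreement among them.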

An even newer area is text generation, which is sometimes seen as “easier” than analysis, perhaps because of the speed of filling in ready-made answers without requiring a large amount of knowledge. However, in reality, the complexities of this field are also very high, especially when strict constraints such as time and space have to be taken into account [26]. The current trend is to use a single grammar for both generation and analysis, usually bidirectional, and systemic grammars and unification grammars are used for this purpose.

The first generations of systems focused heavily on syntactic aspects, leaving semantic and pragmatic aspects out of the picture, and were used to test linguistic theories and randomly generate sentences rather than express semantic meaning in context [27]. The systems of Yngve and Friedman can serve as examples of this category [28]. Only in later systems is the text considered as a whole, structured on different levels, and communication seen as an indirect action to achieve goals. Some more recent systems consider modalities other than language during generation (images, drawings, gestures) or seek to model psychological theories [29]. Finally, systems such as GIBET or GEORGETTE are more interlocutor-aware and seek to determine what information to provide at what time, and thus allow for interpretations [30].

Having presented an overview of linguistic theories used in automatic language processing, we will now consider various aspects of language learning from the perspective of artificial intelligence.

The main argument in favour of natural languages as a means of communication between humans and computers is their flexibility. Instead of treating language flexibility as an obstacle to be solved by adequately limiting the scope of application (which is the position of many modern approaches) [31], it should be addressed directly to guarantee usability. This means that the entire language should be allowed to be used (i.e., all natural-language phenomena should be taken into account, from anaphora to metaphors and metonymies, including ellipses, deictics, etc.). It is almost impossible, except in extremely specific cases, to define restrictive sublanguages that preserve this flexibility.

Modern artificial intelligence systems evaluate text as a holistic form structured at different levels, and communication is interpreted as an indirect action to achieve a communicative goal [32]. More recent systems explore modalities other than language during creation (images, drawings, gestures) or try to model psychological theories [33]. Finally, systems such as GIBET or GEORGETTE take the interlocutor into account better and try to determine what information to provide at what time, so they predict the most likely interpretations [34]. This understanding is not limited to logical evaluation criteria but is the result of cognitive processes that cannot always be described in an algorithmic way. The statement being processed may have several possible interpretations that are constructed in parallel, and the context should prompt the system to the most consistent interpretation, often unique [35]. The cognitive context acts as a set of hypotheses that contribute to the development of the most consistent interpretation. It is a prediction mechanism that differs from traditional analysis, which uses fully automatic processes [36].

For example, in parsing, the principles of “minimal attachment” and “late closure” are often followed [37]. It may be tempting to rely on general principles to build more abstract language models, but it is important to identify exceptions. A statistical study may reveal general rules, but it will not help in solving individual cases [38]. Such patterns cannot be used as formal rules of analysis. They can be explained as an effect of the competitive organisation of interpretive processes, where simpler interpretations are perceived first.

Of course, rational thinking is also involved in understanding, but only after spontaneous perception of meaning (this division allows us to distinguish between “real” ambiguities caused by communication, which should be resolved by dynamic planning, and artificial ambiguities that go unnoticed without in-depth linguistic study) [39]. This second self-controlled and planned aspect allows, in particular, to cope with all unforeseen events and leads to the learning of new knowledge and new processes in language processing.

A true understanding of the use of automated tools in linguistic research involves a constant confrontation between the obtained statements and prior knowledge and should identify the role of learning in language acquisition [40]. Thus, a cognitive model should take into account the fundamental relationships between language, learning, automatic processes, and controlled processes.

Cognitive linguistics, while not taking into account all the aspects mentioned above, is nevertheless an approach aimed at integrating some of them, and thus represents an extremely useful tool for artificial intelligence.

The issue of cognitive linguistics as a leading one, as one that determines the structure and architecture of artificial intelligence algorithms in advance, is polemical and extremely interesting. Therefore, it is analysed in detail in the discussion.

All language research emphasises the diversity and complexity of the knowledge required for automatic systems to understand language. A significant challenge is to determine the interaction of different knowledge sources, relationships, process models, and computer architectures that most effectively implement this knowledge.

Mendelsohn, Tsvetkov, and Jurafsky, in a related study, investigated initial automatic language processing applications that used sequential architectures with fixed and limited connections between modules [41]. However, the results of the present study show that in some cases the exact order of operations becomes impossible. Therefore, it is important to achieve a certain integration of all knowledge in one module, but this remains a difficult task, as it is necessary to develop rules for interaction between different knowledge. In addition, it is difficult to coordinate modifications, especially in experimental areas. Also, the absence of a single linguistic theory that unites all the necessary knowledge for understanding complicates this process.

In a related study, Torfi emphasises that successful collaboration of different knowledge sources is essential, as it allows knowledge to be stored in the form of declarations and managed independently of the knowledge itself [42]. In the same vein, Chakravarthi and Raja argue that it is also important to understand the architecture of multi-agent systems, taking into account automatic and reflective processes, as well as the structure of complex memory to overcome their separation [43]. However, this example is not unique – modern theories and scientific texts by various authors also contain operations of this type [44]. In this regard, cognitive linguistics should take into account the creative and unpredictable aspects of language, and computer processes should be flexible enough to use them effectively.

In modern language processing, two types of memory can be distinguished: very fast and energy-efficient, but limited in scope, short-term memory, which is constantly updated and subject to interpretation, and more stable memory that stores the results of operations over time (long-term memory) [45]. Short-term memory, in turn, can be divided into a conscious part (working memory) and a subconscious part, which is somewhat larger [46]. In order to be able to reuse structures that are not available in short-term memory, it is necessary to store them in long-term memory. The knowledge stored in long-term memory is associated with linguistic units and processed in working memory, where consistency with the cognitive context is established. The results of this processing are transferred to short-term memory and appear in conscious perception, which triggers the process of automatic acquisition and controlled rational processing.

So, cognitive theory fits organically into the architecture of artificial intelligence and accurately interprets the processes that occur in the human brain during language processing. Therefore, it is worth comparing some similarities between the architecture of computer systems and the architecture of human processing.

Although the issue is not yet very clearly articulated, many artificial intelligence researchers criticise computer-based planning models or purely rational thinking and point to limitations that may be inherent in programs based solely on symbol manipulation [47]. The common metaphor of neural networks points to the function of the brain, where intelligence is seen as the propagation of non-symbolic activations in neural networks. Connectionists, drawing on research in neurobiology and neuropsychology, are trying to develop effective methods for processing fuzzy or uncertain information [48]. Although a true brain model is still a long way off, the possibilities of cooperation between connectionist techniques and symbolic systems look quite promising. The connectionist approach, which focuses on interaction, does not distinguish between linguistic representations and other representations. To work with systems of linguistic rules, “localist” connectionist systems need to introduce many intermediary nodes to determine the relationships between the elements of the representation, which can create problems with the efficiency of feedback processing.

Viewing thought as a phenomenon arising from numerous simpler events leads to modern distributed artificial intelligence methods that attempt to go beyond genetic algorithms or connectionist networks, whether or not they remain within a symbolic structure. A genetic algorithm is a program that applies rules modelled on evolution, such as mutation and propagation; in such algorithms, simple programs can interact and modify each other to create more efficient programs to solve problems [49]. Distributed artificial intelligence architectures that allow for dynamic control are necessary to take into account all unpredictable aspects of the language [50]. It is also important to provide programs with the ability to self-represent so that they can analyse their own behaviour. Trends (2) and (3), although recent, are basically the same as the original hypothesis, which implies a level of analysis separate from the neurobiological, sociological, and cultural levels.
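The genetic algorithm mentioned above can be illustrated with a minimal sketch. It is a deliberate simplification: instead of programs that modify each other, the candidates here are bit strings, and the objective (counting 1-bits), the function names, and all parameter values are assumptions chosen for illustration rather than anything prescribed in [49].

```python
import random


def fitness(bits):
    """Toy objective (an assumption): the number of 1-bits in the candidate."""
    return sum(bits)


def mutate(bits, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]


def crossover(a, b, rng):
    """Single-point crossover: prefix of one parent, suffix of the other."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def evolve(length=20, pop_size=30, generations=40, rate=0.02, seed=0):
    """Evolve bit strings and return the best candidate with the best-fitness history."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # selection: keep the fitter half
        children = [population[0]]             # elitism: best survives unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rate, rng))
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history
```

Calling `evolve()` returns the fittest bit string found together with the best fitness recorded in each generation. Because the best candidate is always carried over unchanged, that history never decreases.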

The above approaches have a common feature – they are all based on formal intelligence, separated from the perception of the real world where this intelligence is developed.

Thus, the first crucial point is that disembodiment deprives machines of the richest sources of information. Reasoning and planning mechanisms that rely only on formal reasoning will face a number of problems due to the limitations of human knowledge. Human knowledge is not entirely accurate because it is impossible to know everything about the important variables in a problem. Additionally, what is known to be true at present may change in the future, making it difficult to be precise. Even if everything were known, limited access to this information would make it difficult to store and retrieve the necessary data.

On the other hand, human memory is not only associative, but also prospective and reflective. It is organised around important things and helps to structure the world in such a way that unnecessary things are not remembered. This is not a matter of pre-organisation, but a way of directly accessing the information that is needed. Access to memory makes it possible to find the necessary elements quickly, provides guidance, and opens up new opportunities for solving problems. The second aspect of language learning relates to the mechanism of categorisation, which is central to structuring the world by building connections between objects and creating classes of similar objects. This categorisation is based on physical characteristics, as we use ourselves as the primary reference point. Vital functions are prioritised in the categories presented because they unconsciously reflect the basic needs of people. This has important implications for the learning process, as all knowledge requires a point of reference. It is impossible to imagine intelligence without taking into account the concept of the body as its main physical counterpart.

Therefore, artificial intelligence must have the ability to represent itself and interact with experience, to solve problems through communication and collaboration. This is important because language, learning, and communication play a key role in the use of accumulated knowledge. Thus, a true artificial intelligence should be able to evaluate and modify its programs to achieve optimal results. Overall, cognitive sciences are becoming relevant to solving problems of meaning processing as they interact with various activities and social life.

Symbolic approaches are based on the assumption of mental representations that lead to a strong analogy between the representations that are said to exist in the human mind and those of artificial intelligence, even if the latter are, in some ways, significantly different from human ones. This assumption is crucial in the sense that it implies a level of analysis that is completely separate from the neurobiological level, as well as the sociological and cultural levels.

Staying within this purely symbolic framework, we can highlight the importance of the concept of reflexivity for the scientific understanding of language. From a psychological point of view, an analogy can be drawn between reflexive multi-agent models and basic concepts related to human consciousness. Although these programs cannot mimic the workings of consciousness, it is possible to identify similarities with ideas that arise in metacognition. Despite the differences between human and computer components and their organisation, there are some similarities between the distributed and reflective models and the concept of consciousness, especially with regard to the functional aspects of control. This characteristic of self-representation and self-reference is considered important for intelligence and should be taken into account in artificial intelligence applications.

It is now possible to question the purely symbolic view through connectionist research, which uses effective methods to process fuzzy or uncertain information. Although knowledge about brain functioning is limited, the possibilities of cooperation between connectionist techniques and symbolic systems are quite promising (hybrid systems). With regard to automatic language processing, semantics remains a bottleneck for full-scale implementations, and combining perceptual aspects with learning mechanisms may improve the basis for semantics.

Thus, automatic language processing and human-machine communication should contribute to the development of the basic processes that are necessary for all other types of thinking, just as they do in humans, for whom language is the main tool providing “cognitive skills”.

Language then becomes the foundation for symbolic thinking, which is important for learning; learning, in turn, is necessary for mastering language and symbols, so reflexivity and initiation are central issues. The main goal of the presented study was to understand the basic principles of intelligence in general in order to understand its artificial version. Therefore, the symbiosis between artificial intelligence and cognitive sciences seems to be a natural and promising way to achieve this goal.

In the context of the presented work, several prospects for future research can be highlighted. The first is studying connectionism and finding the optimal combination of connectionist techniques and symbolic systems to create more efficient artificial intelligence systems. The second is exploring the possibilities of cooperation between perceptual aspects and learning mechanisms to improve the basis for semantics in automatic language processing. The third is deeper research into reflexivity and initiation in the context of artificial intelligence development, to better understand the principles of intelligence in general. The fourth is further work on the development of language models and symbolic thinking to improve the learning and communication capabilities of artificial intelligence.