From the perspective of visual communication elements, this paper proposes a composite digital design model based on style migration, aiming to explore and advance digital art design technology. For image recognition, an attention mechanism is added to the ResNet50 network to improve recognition accuracy; for style migration, the VGG19 deep convolutional network is combined with an optimization algorithm to realize image style migration and digital redesign. Building on this style migration model, a graphic rendering model that combines the GrabCut algorithm with the VGG16 neural network is further proposed to realize local style migration of patterns. In an application case study on the artistic digitization of Zhuang brocade lamps, the combined share of “very approved” and “more approved” ratings of the style migration effect exceeds 30%, indicating a good style migration effect. The average art design effect scores for layer richness, element richness and color richness are 4.02, 4.15 and 4.31, all above 4 points, indicating good performance. The art design is also recognized by both experts and ordinary respondents in the satisfaction evaluation.

A nation devoid of advanced natural sciences cannot occupy a leading position globally, nor can a nation lacking in vibrant philosophical and social sciences attain such prominence [1]. By integrating art and technology, marrying folk art elements with contemporary technological advancements, employing digital technology in the preservation and dissemination of folk art, and judiciously incorporating folk art elements into modern products, we can breathe new life into folk art. This endeavor is crucial for the perpetuation of folk skills and the further development of folk art [2-4].

As science and technology advance rapidly, information technology proliferates, and digital technology emerges, fundamentally altering not only the modes of life and production but also fostering novel approaches to visual communication design [5,6]. This digital technology-driven transformation has sparked a revolution in the expression of visual communication art, with its progression fueling the renewal and evolution of this artistic discipline [7,8].

In contemporary times, digital art design has become highly professional and intricate, and the traditional art simulation approach has encountered a developmental bottleneck in the creative process [9]. Tools rooted in computational thinking and logic, such as TouchDesigner, Substance Designer and Houdini, have developed rapidly and been widely adopted, marking a pivotal juncture in digital art creation. Today, art creation is closely intertwined with digital technology, whether through digital creation methods or the digital dissemination of artworks [10]. Consequently, adopting a clear, quantifiable, rational and logical design mindset and approach is of paramount importance.

Amidst the rapid advancement of information technology, digital technology has permeated every facet of human life, serving as a novel lifestyle paradigm and transforming conventional notions of existence. This evolution in digital technology is concurrently reshaping visual communication, transitioning from two-dimensional to three-dimensional perspectives and enabling visual designs to transcend static forms into dynamic expressions, thereby enhancing spatial visual perception. The proliferation of digital media technology has further accelerated the development of 3D technology, affording individuals the ability to immerse themselves in multi-dimensional animations. The digital era exerts a profound influence on visual communication design, augmenting its expressive capabilities and enhancing its aesthetic appeal [11]. Literature [12] highlights the innovative impact of computer technology on graphic design, bestowing patterns with heightened spatial beauty and creativity. Research into the integration of printing and dyeing processes with modern design offers fresh perspectives and expedites the artistic design process. Literature [13] presents a comparative study, affirming the superiority of digital media-based art design over traditional approaches in terms of creative output. Furthermore, Literature [14] explores the integration of artificial intelligence technology with art, endeavoring to embed AI within the creative process and establishing a benchmark for artistic creation, which facilitates authenticity assessment by experts.

In the contemporary digital landscape, art design has transcended the confines of traditional media. Visual programming, a technology with immense potential and a rapid pace of development, has introduced a novel creative vision for art creation in the digital era, ushering in unprecedented transformations. Literature [15] underscores the progression of digital media technology, which is universally acknowledged for enhancing the simplicity and professionalism of film and television media, and offers recommendations for interface optimization. Furthermore, Literature [16] presents a digital image processing approach that facilitates the transformation of digital images into watercolor, ink, and other stylistic renditions through techniques such as sharpening and grayscale adjustments, among others. Notably, this can be achieved on mobile devices, significantly enhancing the efficiency and accessibility of artistic design and creation processes. Lastly, Literature [17] delves into the supportive function of computer-aided technology in architectural design and analysis, elucidating its multifaceted role through practical illustrations encompassing graphic design, advertising photography, and more.

With the advancement of information technology, the integration of digital technology and art design has intensified significantly. Through computer tools, dynamic film and television works, and interactive design, the application of digital technology has expanded greatly, bridging the gap between pure artistic creation and commercial design. Consequently, digital art design has emerged as a novel mode of expression and realization within the contemporary design industry, with a growing number of research directions and practical application cases. Literature [18] proposes a design strategy for the visual communication of weakened target images, grounded in the spatio-temporal filtering principle, which surpasses traditional strategies in restoring image performance and integrity. Literature [19] focuses on optimizing the decompression and compression processes in video retrieval algorithms, introducing a technique for extracting I-frames directly in the compressed domain, thereby improving the art design workflow and its efficiency. Literature [20] explores incorporating convolutional neural networks into students’ emotional image recognition learning, empirically demonstrating its feasibility while cautioning that technological redundancy and complexity may not always benefit teaching, and illustrates this point with actual cases from new media art course design. Lastly, Literature [21] evaluates the innovative impact of computer-aided technology on art design, which to a certain extent simplifies the processes and connections involved in art design, markedly improves its efficiency, and propels the development of information technology in art design.

This paper introduces a composite model for digital design tailored to visual communication elements, grounded in the principles of style migration. It establishes a technical foundation for art digital design, encompassing art image recognition, a style migration model, and a graphic rendering model. For art image recognition, the ResNet50 model is selected, optimized, and its algorithm refined. Within the ResNet50 framework, an attention mechanism is integrated to eliminate redundant information during recognition, thereby enhancing accuracy. For the style migration model, the VGG-19 model is employed, and a style image pre-training library is constructed for fine-tuning and style feature extraction. This approach facilitates the capture of intricate image details, enabling seamless style conversion between content and style samples. Subsequently, a graphic rendering model is proposed, leveraging the GrabCut algorithm and VGG16 neural network. This model realizes the digital rendering of wax print patterns by transforming the style of handmade wax prints, allowing for the migration of diverse styles in the resulting images. Furthermore, by adjusting key parameter values, varying degrees of style migration can be achieved. Finally, the application of the proposed artistic digital design technology is evaluated through a case study involving Zhuang brocade lamps and lanterns. The analysis focuses on style migration effectiveness, artistic design impact, and user satisfaction with the design outcomes.

Visual communication elements include text, graphics, color and composition. Taking these elements as a guide and drawing on the technologies of the information age, this paper proposes a composite model of artistic digital design based on style migration.

ResNet model: ResNet (residual network) is a family of classical backbone networks for computer vision tasks that is widely used for object classification; typical members include ResNet50 and ResNet101. The core idea of a residual network is the shortcut connection: the input of a block, which consists of two or three consecutive convolutional layers, bypasses those layers and is added to their output before being passed to the next layer, so the block only has to learn the residual \(F(x)=H(x)-x\) rather than the full mapping \(H(x)\). Introducing residual learning with identity mappings greatly simplifies training and effectively mitigates the vanishing gradient problem. Since its introduction, the residual concept has been widely adopted and has become a component of virtually every modern deep learning architecture. Whereas earlier designs were limited to a few dozen layers, residual networks can be trained with hundreds or even thousands of layers without the gradients vanishing rapidly, primarily because the shortcut connections let gradients flow directly through the network.
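The shortcut idea can be illustrated with a minimal NumPy sketch of a basic residual block, \(y=\mathrm{ReLU}(F(x)+x)\). This is a simplification, not the paper's code: dense matrices stand in for the block's convolutions, and the function names are hypothetical.

```python
import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    """Element-wise rectified linear unit."""
    return np.maximum(z, 0.0)


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Basic residual block y = ReLU(F(x) + x) with F(x) = W2 ReLU(W1 x).

    Dense matrices stand in for the block's convolutions (a simplification);
    the shortcut adds the input x unchanged to the residual branch F(x).
    """
    residual = w2 @ relu(w1 @ x)   # residual branch F(x)
    return relu(residual + x)      # identity shortcut, then activation


# With all-zero weights the block reduces to ReLU(x): a non-negative input
# passes through unchanged, so adding blocks need not degrade the signal.
x = np.array([0.5, 1.0, 2.0])
zeros = np.zeros((3, 3))
print(residual_block(x, zeros, zeros))
```

Because the identity path is always present, a block whose weights are near zero behaves like a pass-through, which is why very deep stacks remain trainable.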

Optimized design of ResNet50 model: The ResNet50 architecture, which contains 50 weight layers, performs well when trained on the dataset constructed in this study. To tackle overfitting on this extensive dataset, we reduced the number of parameters by applying global average pooling, which converts the 3D feature tensor output by the last convolutional stage into a 1D vector, and we added a Dropout layer to mitigate overfitting further. Experiments show that training is most effective when the Dropout rate is set to 0.5.
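The classification head described above (global average pooling, then Dropout with rate 0.5, then a dense layer) can be sketched in NumPy as follows. The 7 × 7 × 2048 input is the standard ResNet50 output for a 224 × 224 image; the class count, weights and function name are hypothetical. Pooling to a 2048-dimensional vector means the dense layer needs 2048 × classes weights instead of the 100352 × classes that flattening would require.

```python
import numpy as np


def gap_dropout_head(features, weights, bias, p=0.5, training=True, rng=None):
    """Classification head: global average pooling -> Dropout(p) -> dense layer.

    features: (H, W, C) output of the last ResNet50 stage (7 x 7 x 2048 for 224 x 224 input).
    weights:  (C, num_classes); bias: (num_classes,).
    """
    pooled = features.mean(axis=(0, 1))          # GAP: (H, W, C) -> (C,)
    if training:
        rng = np.random.default_rng() if rng is None else rng
        keep = rng.random(pooled.shape) >= p      # drop each unit with probability p
        pooled = pooled * keep / (1.0 - p)        # inverted dropout keeps the expected value
    return pooled @ weights + bias                # logits: (num_classes,)


num_classes = 5                                   # hypothetical number of image classes
rng = np.random.default_rng(0)
feats = rng.random((7, 7, 2048))
w = rng.normal(scale=0.01, size=(2048, num_classes))
b = np.zeros(num_classes)
train_logits = gap_dropout_head(feats, w, b, training=True, rng=rng)
eval_logits = gap_dropout_head(feats, w, b, training=False)
```

At inference (`training=False`) Dropout is disabled, so predictions are deterministic.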

Meanwhile, to improve the accuracy of image recognition, an attention model, which is an improved version of the Encoder-Decoder model, is introduced on top of the ResNet50 model.

Intuitively, the Encoder-Decoder framework can be viewed as a generic processing model that converts one character sequence (or sentence) into another. Given a sentence pair \(\left(X,Y\right)\), the goal is to take the input \(X\) and use the encoder-decoder framework to generate the target \(Y\). \(X\) and \(Y\) may be in the same language or in two different languages. The encoder encodes the input sentence \(X\) and transforms it, through a nonlinear transformation, into an intermediate semantic representation \(C=f(x_{1} ,x_{2} ,x_{3} ,\ldots ,x_{m} )\).

The task of the decoder is to generate the word \(y_{i}\) at time step \(i\) from the intermediate semantic representation \(C\) of the sentence \(X\) and the previously generated history \(y_{1} ,y_{2} ,y_{3} ,\ldots ,y_{i-1}\), that is, \(y_{i} =g(C,y_{1} ,y_{2} ,y_{3} ,\ldots ,y_{i-1} )\).
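In the plain Encoder-Decoder framework, every \(y_{i}\) is generated from the same fixed \(C\). Attention, in its common soft form, replaces \(C\) with a step-dependent context \(C_{i}\): a weighted average of the encoder states whose weights are recomputed at every decoding step. The NumPy sketch below illustrates only that idea. The dot-product scoring function and all names are assumptions, since the paper does not specify how its attention weights are computed or attached to ResNet50; one option would be to treat the spatial positions of a ResNet50 feature map as the encoder states.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(z - z.max())
    return e / e.sum()


def attention_context(encoder_states: np.ndarray, decoder_state: np.ndarray):
    """Soft attention: C_i = sum_j alpha_ij h_j with alpha_i = softmax_j(h_j . s_{i-1}).

    encoder_states: (m, d) hidden states h_1..h_m for the inputs x_1..x_m
    decoder_state:  (d,)   previous decoder state s_{i-1}
    """
    scores = encoder_states @ decoder_state   # dot-product scores (assumed scoring function)
    alpha = softmax(scores)                   # attention weights, non-negative, sum to 1
    return alpha @ encoder_states, alpha      # context vector C_i and the weights


h = np.eye(3)                                 # three toy encoder states
context, weights = attention_context(h, np.array([10.0, 0.0, 0.0]))
```

Here the decoder state aligns with the first encoder state, so almost all of the weight falls on it; a zero decoder state would spread the weight uniformly.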

Style migration pre-training model selection: The style pre-training model used in this experiment is the VGG-19 deep convolutional network; its pre-trained version is fine-tuned on the constructed style image pre-training library. When extracting style features, VGG19 attends to the structural elements of the input image, which helps preserve the structure and important elements of the original image during the subsequent style migration. In addition, using this model for feature extraction allows it to learn detailed image features, such as contour features, compositional features and semantic features of text descriptions, which benefits artistic style migration. The VGG-19 model used in this study employs 3×3 convolution kernels and 2×2 pooling kernels, which allows a deeper network than earlier models such as AlexNet and therefore gives it a stronger feature extraction capability. The receptive field of each layer of VGG19 is computed as in Equation (1): \[\label{GrindEQ__1_} F(i)=\left(F(i+1)-1\right)\times \text{Stride}_{i} +\text{Kernel\_Size}_{i} ,\tag{1}\] where \(F(i)\) is the receptive field of layer \(i\), \(\text{Stride}_{i}\) is the stride of layer \(i\), and \(\text{Kernel\_Size}_{i}\) is its kernel size. Overall, the VGG model has the following advantages.
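Equation (1) is applied recursively from the top layer down to the input. The sketch below assumes the standard VGG layout (all convolutions 3×3 with stride 1, all poolings 2×2 with stride 2) and a hypothetical helper `vgg_layers` that lists the layers up to the last convolution. It shows, for example, that two stacked 3×3 convolutions see a 5×5 input region.

```python
def receptive_field(layers):
    """Apply F(i) = (F(i+1) - 1) * Stride_i + KernelSize_i from the top layer down.

    layers: list of (kernel_size, stride) tuples ordered from input to output.
    Returns the receptive field, in input pixels, of one unit in the last layer.
    """
    f = 1  # a single unit in the topmost feature map
    for kernel, stride in reversed(layers):
        f = (f - 1) * stride + kernel
    return f


def vgg_layers(convs_per_block):
    """3x3/stride-1 convs per block, a 2x2/stride-2 pool between blocks (assumed layout)."""
    layers = []
    for i, n in enumerate(convs_per_block):
        layers += [(3, 1)] * n
        if i < len(convs_per_block) - 1:
            layers.append((2, 2))
    return layers


print(receptive_field([(3, 1), (3, 1)]))          # two stacked 3x3 convolutions
print(receptive_field(vgg_layers((2, 2, 4, 4, 4))))  # VGG19 up to conv5_4
```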

First, smaller convolution kernels. The VGG model stacks several small convolution kernels, which on the one hand reduces the number of parameters and on the other hand introduces more nonlinear mappings, increasing the expressive power of the network; this improves its ability to learn image details and yields better generation results.

Second, deeper layers and wider feature maps. The convolutional layers expand the number of channels while the pooling layers reduce the width and height, so the architecture becomes deeper and wider while the growth in computation is kept under control, reducing the consumption of computational resources and making the model easier to deploy on mobile devices.
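The parameter saving from small kernels can be checked with simple arithmetic. Two stacked 3×3 layers cover the same 5×5 region as one 5×5 layer (and three cover the same 7×7 region as one 7×7 layer), but with fewer weights. The sketch assumes equal input and output channel counts \(C\) (a hypothetical 256 here) and ignores biases.

```python
def conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights of one k x k convolution layer (biases ignored)."""
    return kernel * kernel * c_in * c_out


C = 256  # hypothetical channel count, same for input and output
two_3x3 = 2 * conv_params(3, C, C)    # receptive field 5
one_5x5 = conv_params(5, C, C)        # receptive field 5
three_3x3 = 3 * conv_params(3, C, C)  # receptive field 7
one_7x7 = conv_params(7, C, C)        # receptive field 7
print(two_3x3, one_5x5)    # 18*C^2 vs 25*C^2
print(three_3x3, one_7x7)  # 27*C^2 vs 49*C^2
```

The stacked version also inserts a nonlinearity after each 3×3 layer, which is the second benefit the text mentions.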

Optimization of style migration model: The pre-trained VGG-19 network is chosen as the basis for extracting image features, and an adaptive multiple style migration module, StyleTranser, is additionally proposed. The migration process requires two images: a content image as the content sample and a style image as the style sample. The essence of image style migration is to extract features from both images computationally and use them to transform the content sample into the style of the style sample. The algorithm in this experiment is an optimization of the algorithm of Gatys et al.: the number of iterations is set, and the predefined loss function is minimized to reduce the gap between the predicted and target values, which trains the style migration network.

Assume that each feature map obtained from the VGG19 network has size \(H\times W\). The information of all feature maps in a layer can then be represented by \(F\in R^{N\times H\times W}\), where \(N\) is the number of feature maps (the number of convolution kernels); for the loss computations each map is flattened, so \(F\) is treated as an \(N\times M\) matrix with \(M=H\times W\). Letting \(C\) denote the corresponding features of the content image, the content loss function is defined as in Equation (2): \[\label{GrindEQ__2_} L_{\text{content}} (F,C)=\frac{1}{2} \sum _{i,j}\left(F_{i,j} -C_{i,j} \right)^{2}.\tag{2}\]
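Equation (2) translates directly into NumPy once the \(N\times H\times W\) feature tensor is flattened to \(N\times M\). In this minimal sketch the function names are hypothetical, and the features are plain arrays rather than real VGG19 activations.

```python
import numpy as np


def flatten_features(fmap: np.ndarray) -> np.ndarray:
    """(N, H, W) feature maps -> (N, H*W) matrix, one row per convolution kernel."""
    return fmap.reshape(fmap.shape[0], -1)


def content_loss(F: np.ndarray, C: np.ndarray) -> float:
    """Eq. (2): 0.5 * sum_ij (F_ij - C_ij)^2 for same-shaped feature matrices."""
    return 0.5 * float(np.sum((F - C) ** 2))


F = flatten_features(np.zeros((2, 3, 3)))   # generated-image features (toy values)
C = flatten_features(np.ones((2, 3, 3)))    # content-image features (toy values)
print(content_loss(F, C))                   # 18 differences of 1, halved
```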

The content loss function supervises the style migration network so that it preserves the compositional elements and key details of the original image as much as possible during training. In addition, different convolution kernels in the same convolutional layer of VGG19 extract different features, so the style of an image is represented by the correlations between its feature maps, and the Gram matrix is used as the representation of the image’s style features. The Gram matrix \(G\in R^{N\times N}\) is computed as in Equation (3): \[\label{GrindEQ__3_} G_{i,j} =\sum _{k}F_{ik} F_{jk} .\tag{3}\]
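Because Equation (3) sums over the spatial index \(k\), the Gram matrix of a flattened \(N\times M\) feature matrix is simply \(FF^{T}\). A minimal sketch (function name hypothetical):

```python
import numpy as np


def gram_matrix(F: np.ndarray) -> np.ndarray:
    """Eq. (3): G_ij = sum_k F_ik F_jk for F of shape (N, M); returns an (N, N) matrix."""
    return F @ F.T


F = np.array([[1.0, 2.0],
              [3.0, 4.0]])
G = gram_matrix(F)   # symmetric; G[0, 1] = 1*3 + 2*4
```

Summing over spatial positions discards where a feature occurs and keeps only which features co-occur, which is why the Gram matrix captures style rather than layout.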

For the style image \(S\) and the initialization (generated) image \(I\), let \(G^{l}\) and \(A^{l}\) denote the Gram matrices that encode the style feature information of the style image and of the generated image at layer \(l\), respectively, where \(N_{l}\) is the number of feature maps and \(M_{l}\) the size of each feature map at that layer. The multiple style loss function is then defined as in Eq. (4): \[\label{GrindEQ__4_} L_{\text{style}} (S,I)=\sum _{l=0}^{L}w_{l} \frac{1}{2N_{l}^{2} M_{l}^{2} } \sum _{i,j}\left(G_{i,j}^{l} -A_{i,j}^{l} \right)^{2},\tag{4}\] where \(w_{l}\) is the weight of layer \(l\).
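A minimal NumPy sketch of the multi-layer style loss in Eq. (4), assuming the per-layer features are already flattened to \(N_{l}\times M_{l}\) matrices. The function names are hypothetical, and \(G^{l}\) and \(A^{l}\) follow the text (style image and generated image, respectively).

```python
import numpy as np


def gram(F: np.ndarray) -> np.ndarray:
    """Gram matrix of an (N, M) feature matrix."""
    return F @ F.T


def style_loss(style_feats, gen_feats, weights) -> float:
    """Eq. (4): sum_l w_l / (2 N_l^2 M_l^2) * sum_ij (G^l_ij - A^l_ij)^2.

    style_feats, gen_feats: lists of (N_l, M_l) matrices, one per selected layer.
    """
    total = 0.0
    for Fs, Fx, w in zip(style_feats, gen_feats, weights):
        n, m = Fs.shape
        G, A = gram(Fs), gram(Fx)           # style-image and generated-image Grams
        total += w / (2.0 * n**2 * m**2) * float(np.sum((G - A) ** 2))
    return total
```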

The final loss function is the weighted sum of the content loss and the style loss, as in Eq. (5): \[\label{GrindEQ__5_} L_{\text{total}} =\gamma _{1} L_{\text{content}} +\gamma _{2} L_{\text{style}},\tag{5}\] where \(\gamma _{1}\) and \(\gamma _{2}\) are weighting hyperparameters that balance content preservation against style transfer; their initial values can be set to (0.5, 0.5).
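Gatys-style optimization minimizes Eq. (5) by gradient descent on the generated image; in a real implementation the gradient flows back through VGG-19, for instance via TensorFlow’s automatic differentiation. To keep the sketch self-contained, it deliberately simplifies: one layer’s feature matrix is itself the optimization variable (an identity "feature extractor"), the content and style features are random stand-ins, and the analytic gradients of Eqs. (2) and (4) are used. The iteration count and learning rate are assumptions.

```python
import numpy as np


def total_loss_and_grad(F, C, S, g1=0.5, g2=0.5):
    """Eq. (5) for a single layer, with its analytic gradient w.r.t. F.

    F: (N, M) features being optimized (stand-in for the generated image);
    C: (N, M) content features; S: (N, M) style features.
    """
    n, m = F.shape
    G_s = S @ S.T                       # Gram matrix of the style features (target)
    A = F @ F.T                         # Gram matrix of the generated features
    c = 1.0 / (2.0 * n**2 * m**2)       # normalization used in Eq. (4)
    l_content = 0.5 * np.sum((F - C) ** 2)
    l_style = c * np.sum((G_s - A) ** 2)
    # dL_content/dF = F - C; dL_style/dF = 4c (A - G_s) F because both Grams are symmetric
    grad = g1 * (F - C) + g2 * 4.0 * c * (A - G_s) @ F
    return g1 * l_content + g2 * l_style, grad


rng = np.random.default_rng(0)
C = rng.normal(size=(3, 16))            # random stand-in content features
S = rng.normal(size=(3, 16))            # random stand-in style features
F = rng.normal(size=(3, 16))            # white-noise initialization
losses = []
for step in range(200):                 # number of iterations (hypothetical)
    loss, grad = total_loss_and_grad(F, C, S)
    losses.append(loss)
    F = F - 0.1 * grad                  # plain gradient descent, learning rate 0.1 (assumed)
```

Raising \(\gamma _{2}\) relative to \(\gamma _{1}\) pulls the result further toward the style statistics at the expense of content fidelity.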

The method proposed in this chapter for digitally rendering local style pattern migration integrates the GrabCut algorithm with the VGG16 neural network. The rendering procedure consists of three sequential steps, as follows.

In the first step, the content image is segmented with the GrabCut algorithm to determine the region to which the style will be migrated.

In the second step, the segmented image is binarized to generate a mask image: the part of the content image under the white region of the mask undergoes style migration, while the part under the black region remains unchanged.

In the third step, the mask matrix is extracted from the mask image and fed into the feature channel of the style migration, thus realizing the local style migration of the pattern.
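Feeding the mask into the feature channels requires a mask at each layer’s resolution, because every VGG pooling layer halves the width and height. The sketch below assumes the binarized mask has been scaled to {0, 1} and shrinks it by block averaging, which is one simple choice and not necessarily the paper’s; the layer names follow the conv\(k\)_1 convention used later in the text, and the helper names are hypothetical.

```python
import numpy as np


def downsample_mask(mask: np.ndarray, factor: int) -> np.ndarray:
    """Shrink an (H, W) mask by averaging non-overlapping factor x factor tiles."""
    h, w = mask.shape
    h2, w2 = h // factor, w // factor
    tiles = mask[: h2 * factor, : w2 * factor].reshape(h2, factor, w2, factor)
    return tiles.mean(axis=(1, 3))


def layer_masks(mask, layers=("conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1")):
    """Mask per layer; convk_1 follows k - 1 pooling layers, i.e. 1/2**(k-1) resolution."""
    return {name: downsample_mask(mask, 2 ** (int(name[4]) - 1)) for name in layers}


mask = np.zeros((16, 16))
mask[:, :8] = 1.0                      # left half: region that receives the style
masks = layer_masks(mask)
```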

The simulation of wax pattern style migration is divided into the following 3 steps.

Image segmentation: The foreground pattern of the content image is separated from its background using the GrabCut image segmentation algorithm, an improved version of the GraphCut method. GrabCut exploits both texture (color) information and boundary (contrast) information in the image, and achieves good segmentation with minimal user interaction.

Masked images: Because the content image only needs to be divided into two regions, foreground and background, the mask image also needs only two regions. After segmentation, the background of the resulting image is black (pixel value 0), so the result is binarized with Eq. (6): \[\label{GrindEQ__6_} S=T(r)=\begin{cases} 255, & r\ne 0,\\ 0, & r=0, \end{cases}\tag{6}\] where \(r\) is a pixel value of the segmented image and \(S\) is the corresponding mask value.
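Eq. (6) is a one-line NumPy operation. The sketch assumes the segmented image is either a single-channel array or an RGB array; for RGB, treating a pixel as foreground when any channel is non-zero is an assumption. The function name is hypothetical.

```python
import numpy as np


def binarize(segmented: np.ndarray) -> np.ndarray:
    """Eq. (6): pixels with r != 0 become 255 (foreground); background (r == 0) stays 0.

    segmented: (H, W) grayscale or (H, W, 3) color segmentation result with a black background.
    """
    if segmented.ndim == 3:
        segmented = segmented.max(axis=2)   # foreground if any channel is non-zero (assumed)
    return np.where(segmented != 0, 255, 0).astype(np.uint8)


gray = np.array([[0, 5], [200, 0]], dtype=np.uint8)
mask = binarize(gray)
```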

Establishment of VGG16 network model: This study uses the VGG16 network model, which has a straightforward structure and moderate computational demands. Compared with earlier network models such as LeNet, AlexNet and GoogLeNet, VGG16 offers a relatively simple feature extraction process. Unlike more recent mainstream models such as ResNet50, whose many layers lead to long computation times and more abstract feature representations, VGG16 strikes a balance that allows content features to be reconstructed without undue complexity.

The VGG16 network is organized into 16 weight layers, namely 13 convolutional layers and 3 fully connected layers, together with 5 pooling layers. The convolutional layers are arranged into 5 groups: the first two groups contain 2 convolutional layers each, and the third, fourth and fifth groups contain 3 convolutional layers each. Because the pooling layers have no weight coefficients, they are not counted among the 16 layers of VGG16.
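The layer count can be checked by generating the layer names. The conv\(b\)_\(i\) naming matches the layer names used later in the text (e.g. conv5_2); the pool\(b\) and fc6 to fc8 names follow the common Caffe-style convention and are an assumption here.

```python
VGG16_BLOCKS = (2, 2, 3, 3, 3)  # convolutional layers in each of the 5 groups


def vgg16_layer_names():
    """Layer names in order: conv{block}_{index} and pool{block}, then the FC layers."""
    names = []
    for b, n in enumerate(VGG16_BLOCKS, start=1):
        names += [f"conv{b}_{i}" for i in range(1, n + 1)]
        names.append(f"pool{b}")
    names += ["fc6", "fc7", "fc8"]   # Caffe-style names (assumed)
    return names


names = vgg16_layer_names()
convs = [n for n in names if n.startswith("conv")]
pools = [n for n in names if n.startswith("pool")]
fcs = [n for n in names if n.startswith("fc")]
print(len(convs) + len(fcs))  # weight layers only; pooling layers are not counted
```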

This chapter primarily introduces a graphic rendering approach grounded in style migration, ensuring that the resultant image not only preserves the semantic information inherent in the content image, such as spatial details and shape contours, but also seamlessly integrates the color characteristics and texture attributes of the style image, thereby achieving a harmonious blend of both content and style.

Total loss function: To make the generated image retain both the content of the content image and the style of the style image, let \(\vec{p}\) be the content image, \(\vec{a}\) the style image, and \(\vec{x}\) the random white noise image (i.e., the image to be generated). The VGG16 network is used as a feature extractor to extract content and style features and to compute the content loss, the style loss and the total variation loss, and the total loss function is defined as: \[\label{GrindEQ__7_} L_{\text{total}} (\vec{p},\vec{a},\vec{x})=\alpha L_{\text{content}} (\vec{p},\vec{x})+\beta L_{\text{style}} (\vec{a},\vec{x})+\gamma L_{\text{total\_variation}} (\vec{x}),\tag{7}\] where \(L_{\text{content}}\) is the content loss with respect to the content image, \(L_{\text{style}}\) is the style loss with respect to the style image, \(L_{\text{total\_variation}}\) is the total variation loss of the generated image, and \(\alpha\), \(\beta\) and \(\gamma\) are the weights of the content loss, the style loss and the total variation loss, respectively, in the style migration process.

A series of images with different degrees of style migration can be generated by varying \(\alpha /\beta\) and \(\gamma\). The convolutional layer conv5_2 is used to compute the content loss, and the layers conv1_1, conv2_1, conv3_1, conv4_1 and conv5_1 are used to compute the style loss, each with weight \(w_{l} =\frac{1}{5}\); the total variation loss is computed on the white noise (generated) image.
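The layer selection and weighting of Eq. (7) can be written down as a small configuration plus one combining function. This sketch assumes the per-layer style losses \(E_{l}\), the content loss and the total variation loss have already been computed elsewhere; the constant and function names are hypothetical.

```python
CONTENT_LAYER = "conv5_2"
STYLE_LAYERS = ["conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1"]
STYLE_WEIGHTS = {name: 1.0 / len(STYLE_LAYERS) for name in STYLE_LAYERS}  # w_l = 1/5


def total_loss(content_loss, style_losses, tv_loss, alpha, beta, gamma):
    """Eq. (7): alpha * L_content + beta * sum_l w_l E_l + gamma * L_TV.

    content_loss: loss at CONTENT_LAYER; style_losses: {layer name: E_l};
    tv_loss: total variation loss of the generated image.
    """
    l_style = sum(STYLE_WEIGHTS[name] * style_losses[name] for name in STYLE_LAYERS)
    return alpha * content_loss + beta * l_style + gamma * tv_loss


per_layer = {name: 1.0 for name in STYLE_LAYERS}
# Increasing beta (or lowering alpha) shifts the balance toward the style image.
mild = total_loss(2.0, per_layer, 3.0, alpha=1.0, beta=1.0, gamma=0.0)
strong = total_loss(2.0, per_layer, 3.0, alpha=1.0, beta=10.0, gamma=0.0)
```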

Content loss function: Content features are extracted with VGG16, but not every layer of the network is used. Typically, the feature maps of a single convolutional layer serve as the content features, and the same layer is used to extract the content features of the white noise image. Let \(\vec{x}\) be the random white noise image (i.e., the image to be generated), which is re-encoded by each convolutional layer of VGG16. Layer \(l\) has \(N_{l}\) convolution kernels, each producing a feature map of size \(M_{l}\), so the output of layer \(l\) can be stored as a matrix \(F^{l} \in R^{N_{l} \times M_{l} }\). Let \(\vec{p}\) be the content image, which is encoded by the same layers, and let \(P^{l}\) and \(F^{l}\) denote the content features of \(\vec{p}\) and \(\vec{x}\) at layer \(l\), respectively. The content loss function and its derivative are then defined as: \[\label{GrindEQ__8_} L_{\text{content}} (\vec{p},\vec{x},l)=\frac{1}{2} \sum _{i,j}\left(F_{ij}^{l} -P_{ij}^{l} \right)^{2},\tag{8}\] \[\label{GrindEQ__9_} \frac{\partial L_{\text{content}} }{\partial F_{ij}^{l} } =\begin{cases} \left(F^{l} -P^{l} \right)_{ij} , & F_{ij}^{l} >0,\\ 0, & \text{otherwise}, \end{cases}\tag{9}\] where \(F_{ij}^{l}\) is the activation of the random white noise image at position \(j\) of the \(i\)th convolution kernel in layer \(l\) of VGG16, and \(P_{ij}^{l}\) is the activation of the content image at position \(j\) of the \(i\)th convolution kernel in layer \(l\).
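Eqs. (8) and (9) for a single layer can be sketched as follows. As in Eq. (9), the gradient is zeroed where the activation is not positive, i.e. where the preceding ReLU blocks it. The inputs are toy arrays standing in for VGG16 activations, and the function name is hypothetical.

```python
import numpy as np


def content_loss_and_grad(F: np.ndarray, P: np.ndarray):
    """Eq. (8) and Eq. (9) for one layer.

    F: (N_l, M_l) activations of the generated image; P: same shape, content image.
    """
    diff = F - P
    loss = 0.5 * float(np.sum(diff ** 2))
    grad = np.where(F > 0, diff, 0.0)   # Eq. (9): pass the gradient only where F > 0
    return loss, grad


F = np.array([[1.0, -1.0],
              [2.0, 0.0]])
P = np.zeros((2, 2))
loss, grad = content_loss_and_grad(F, P)
```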

Style loss function: Local style migration is controlled by the information in the mask map, and spatial guidance (bootstrap) feature maps are introduced to restrict the region in which the style is migrated. Let \(T_{r}^{l}\) denote the \(r\)th bootstrap channel (\(r=1,\ldots ,R\)) at layer \(l\). The feature maps of each VGG16 layer are multiplied by the \(R\) bootstrap channels; that is, the mask information of the mask map is multiplied element by element with the feature information of the style map: \[\label{GrindEQ__10_} F_{r}^{l} (x)=T_{r}^{l} \odot F^{l} (x),\tag{10}\] where \(\odot\) denotes element-wise multiplication.
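Eq. (10) is a broadcast element-wise product: each guidance channel multiplies every feature map of the layer. The sketch assumes \(R=2\) channels, the mask and its complement (foreground and background), already at the layer’s resolution; the function name is hypothetical.

```python
import numpy as np


def guided_features(F: np.ndarray, T: np.ndarray) -> np.ndarray:
    """Eq. (10): F_r = T_r ⊙ F for every guidance channel r.

    F: (N_l, h, w) feature maps of layer l.
    T: (R, h, w) guidance channels at the same resolution.
    Returns (R, N_l, h, w): each guidance channel applied to all N_l feature maps.
    """
    return T[:, None, :, :] * F[None, :, :, :]


F = np.ones((2, 2, 2))                      # toy feature maps: N_l = 2, 2 x 2 each
mask = np.array([[1.0, 0.0],
                 [0.0, 1.0]])
T = np.stack([mask, 1.0 - mask])            # R = 2: foreground and background (assumed)
Fr = guided_features(F, T)
```

Because the two channels sum to one everywhere, adding the guided features back together recovers the original feature maps.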

The image style is defined by the correlations between the activations of the different channels of a given VGG16 layer, and the Gram matrix is used to measure the similarity of the outputs of each VGG16 layer. The bootstrapped Gram matrix with mask information, denoted \(G_{r}^{l} (x)\), is obtained from the inner products between the channels of \(F_{r}^{l}\): \[\label{GrindEQ__11_} G_{rij}^{l} (x)=\sum _{k}F_{rik}^{l} (x)F_{rjk}^{l} (x),\tag{11}\] where \(G_{r}^{l} \in R^{N_{l} \times N_{l} }\), and \(G_{rij}^{l}\) is the inner product of the output activations of the \(i\)th and \(j\)th convolution kernels in layer \(l\) of VGG16 for the random white noise image.
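With guided features of shape \((R, N_{l}, h, w)\), Eq. (11) becomes a batched matrix product over the flattened spatial positions, one \(N_{l}\times N_{l}\) Gram matrix per guidance channel. A minimal sketch (function name hypothetical):

```python
import numpy as np


def guided_gram(Fr: np.ndarray) -> np.ndarray:
    """Eq. (11): G_r = F_r F_r^T for each guidance channel r.

    Fr: (R, N_l, h, w) guided features; returns (R, N_l, N_l).
    """
    R, N = Fr.shape[:2]
    flat = Fr.reshape(R, N, -1)                 # (R, N_l, M_l) with M_l = h * w
    return flat @ flat.transpose(0, 2, 1)       # batched inner products over positions


G = guided_gram(np.ones((1, 2, 2, 2)))          # every entry sums four products of 1
masked = np.ones((1, 1, 2, 2))
masked[0, 0, 0, 0] = 0.0                        # a masked-out position contributes nothing
G_masked = guided_gram(masked)
```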

An image resembling the style image is generated by reducing the mean squared distance between the bootstrapped Gram matrices of the style image and of the random white noise image. Let \(\vec{a}\) and \(\vec{x}\) denote the style image and the random white noise image, respectively, and let \(A_{r}^{l}\) and \(G_{r}^{l}\) be their bootstrapped Gram matrices at layer \(l\). The style loss of layer \(l\) is then defined as: \[\label{GrindEQ__12_} E_{rl} =\frac{1}{4N_{l}^{2} M_{l}^{2} } \sum _{i,j}\left(G_{rij}^{l} -A_{rij}^{l} \right)^{2} .\tag{12}\] Weighting the selected convolutional layers, the overall style loss function is: \[\label{GrindEQ__13_} L_{\text{style}} (\vec{a},\vec{x})=\sum _{l=0}^{L}w_{l} E_{rl} ,\tag{13}\] where \(w_{l}\) is the weight of the style loss of layer \(l\) in VGG16.
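Eqs. (12) and (13) can be sketched as follows, assuming the bootstrapped Gram matrices (for one guidance channel) and each layer’s feature-map size \(M_{l}\) are already available. Note the \(1/(4N_{l}^{2}M_{l}^{2})\) normalization used here; the function names are hypothetical.

```python
import numpy as np


def layer_style_loss(G: np.ndarray, A: np.ndarray, m: int) -> float:
    """Eq. (12): E_{r,l} = sum_ij (G_ij - A_ij)^2 / (4 N_l^2 M_l^2) for (N_l, N_l) Grams."""
    n = G.shape[0]
    return float(np.sum((G - A) ** 2)) / (4.0 * n**2 * m**2)


def style_loss(grams_x, grams_a, sizes, weights) -> float:
    """Eq. (13): sum_l w_l E_{r,l} over the selected layers."""
    return sum(w * layer_style_loss(G, A, m)
               for G, A, m, w in zip(grams_x, grams_a, sizes, weights))


# Five layers with identical toy Grams and w_l = 1/5 give the same value as one layer.
e = layer_style_loss(np.eye(2), np.zeros((2, 2)), m=1)
total = style_loss([np.eye(2)] * 5, [np.zeros((2, 2))] * 5, [1] * 5, [0.2] * 5)
```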

Total variation loss function: Total variation (TV) is a commonly used regularizer; in image style migration it keeps the image smooth and suppresses artifacts that may arise during image reconstruction. Let \(\vec{x}\) denote the random white noise image, and let \(x_{i,j}^{l}\) be the element in row \(i\), column \(j\) of the feature map of the image to be generated at layer \(l\) of VGG16. The total variation loss function is then defined as: \[\begin{aligned} \label{GrindEQ__14_} L_{\text{total\_variation}} (\vec{x},l) =\sum _{i,j}\left(\left(x_{i,j+1}^{l} -x_{i,j}^{l} \right)^{2} +\left(x_{i+1,j}^{l} -x_{i,j}^{l} \right)^{2} \right)^{\delta /2}. \end{aligned}\tag{14}\]

That is, for each pixel of the random white noise image, the squared differences from its right-hand neighbor and from the neighbor below are added, this sum is raised to the power \(\delta /2\), and the results are summed over all pixels.
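Eq. (14) can be evaluated with array slicing. This sketch covers a single 2-D channel and sums over the pixels that have both a right-hand and a lower neighbor; for a color image the values would typically be summed over channels. The function name is hypothetical.

```python
import numpy as np


def tv_loss(x: np.ndarray, delta: float = 2.0) -> float:
    """Eq. (14) on a 2-D array x (one channel of the image being generated)."""
    dx = x[:-1, 1:] - x[:-1, :-1]   # x_{i,j+1} - x_{i,j}
    dy = x[1:, :-1] - x[:-1, :-1]   # x_{i+1,j} - x_{i,j}
    return float(np.sum((dx ** 2 + dy ** 2) ** (delta / 2.0)))


x = np.array([[0.0, 1.0],
              [2.0, 3.0]])          # one interior pixel: dx = 1, dy = 2
smooth = tv_loss(np.full((4, 4), 3.0))
```

With \(\delta =2\) the loss is a plain sum of squared differences; \(\delta <2\) penalizes large jumps less severely, which tends to preserve edges.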

Building upon the foundation of the art digital design technology previously introduced, this chapter focuses on Zhuang brocade lamps, which incorporate the novel art digital design methodology presented herein. Through an examination of the integration of visual communication elements with this art digital design approach, we evaluate the effectiveness and impact of the technology on the lamps, offering insights into its application potential.

The image style migration algorithm in this paper is implemented in Python 3.6 on a machine with 8 GB of memory and an NVIDIA GeForce GTX 960 GPU. The software environment is the Windows 7 operating system, TensorFlow-gpu 1.14.0 and Jupyter Notebook 6.0.22. In the experiments, the resulting images were varied by setting different numbers of iterations, style weights, content weights and total variation weights.

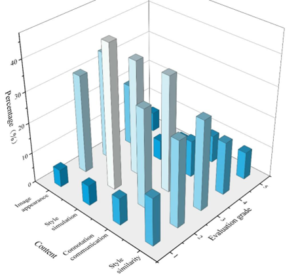

This section assesses the style migration effect of the Zhuang brocade lamps and lanterns. Both Zhuang and non-Zhuang respondents evaluated the lamps from four perspectives: image appearance and ornamentation, style simulation effect, expression of Zhuang cultural connotations, and style similarity. The results are presented in Figure 1, where the Y-axis is a 1 to 5 recognition scale ranging from “very recognized” to “very unrecognized.” As the figure shows, 53.34% of respondents rated the style simulation effect as “very recognized” or “more recognized,” exceeding 50%, which indicates a satisfactory simulation across the various styles. For image appearance, cultural connotation and style similarity, the combined share of “very recognized” and “more recognized” ratings exceeds 30%, suggesting that the design partially matches respondents’ aesthetic preferences. However, there is still notable room for improvement: the combined share of “relatively unrecognized” and “very unrecognized” ratings for image appearance, cultural connotation and style similarity exceeds 20%, pointing to opportunities to refine the style migration process and deepen its cultural resonance. In particular, the cultural connotation conveyed by the design appears insufficient, underscoring the need to enrich the communicative aspect of the style migration effect.

By using deep learning for style migration, this approach overcomes the material constraints of traditional art design and considerably broadens the application of style migration in digital art design, opening up new creative possibilities.

This section evaluates the overall artistic digital design effect of the Zhuang brocade lamps and lanterns; the results are summarized in Table 1. Scores range from 1 (poor) to 5 (excellent). Among the individual indices, color richness has an average score of 4.03, exceeding 4, which signifies strong performance in color design. The average scores for layer richness and element richness are 3.54 and 3.72, respectively, and those for randomness, color saturation and color brightness are 3.74, 3.50 and 3.76. All of these scores lie between 3 and 4, suggesting generally satisfactory performance with ample room for further improvement.

| Indicator | Rating 1 | Rating 2 | Rating 3 | Rating 4 | Rating 5 | Average score | Standard deviation |
|---|---|---|---|---|---|---|---|
| Richness of the layers | 8.75% | 10.50% | 18.56% | 42.32% | 19.87% | 3.54 | 0.766 |
| Richness of element | 2.71% | 9.80% | 18.50% | 50.73% | 18.26% | 3.72 | 0.597 |
| Randomness | 2.68% | 7.65% | 19.68% | 53.17% | 16.82% | 3.74 | 0.611 |
| Richness of color | 3.84% | 5.83% | 6.54% | 50.85% | 32.94% | 4.03 | 0.515 |
| Saturation of colors | 5.20% | 12.71% | 21.43% | 47.84% | 12.82% | 3.50 | 0.694 |
| Brightness of colors | 2.60% | 8.64% | 18.50% | 50.64% | 19.62% | 3.76 | 0.746 |
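The Average score column of Table 1 can be recomputed from the rating distribution as a weighted mean, \(\bar{s}=\sum _{k=1}^{5}k\,p_{k}/\sum _{k}p_{k}\), where \(p_{k}\) is the percentage of respondents giving score \(k\). The short check below uses only the percentages in the table; the dictionary and function names are hypothetical.

```python
RATINGS = {  # percentage of respondents giving scores 1..5, from Table 1
    "Richness of the layers": [8.75, 10.50, 18.56, 42.32, 19.87],
    "Richness of element": [2.71, 9.80, 18.50, 50.73, 18.26],
    "Randomness": [2.68, 7.65, 19.68, 53.17, 16.82],
    "Richness of color": [3.84, 5.83, 6.54, 50.85, 32.94],
    "Saturation of colors": [5.20, 12.71, 21.43, 47.84, 12.82],
    "Brightness of colors": [2.60, 8.64, 18.50, 50.64, 19.62],
}


def mean_score(percentages):
    """Weighted mean of scores 1..5, normalized by the total percentage."""
    return sum(k * p for k, p in zip(range(1, 6), percentages)) / sum(percentages)


for name, dist in RATINGS.items():
    print(f"{name}: {mean_score(dist):.2f}")
```

Each recomputed mean rounds to the value reported in the table.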

| Evaluation dimension | Evaluation index | Number of experts | Total score | Average score | Score rate | Dimension score rate | Total average score |
|---|---|---|---|---|---|---|---|
| Content design | Breadth | 8 | 56.16 | 7.02 | 0.77 | 0.8 | 82.22 |
| | Depth | 8 | 58.4 | 7.3 | 0.8 | | |
| | Correlation degree | 8 | 53.6 | 6.7 | 0.83 | | |
| Presentation design | Grace | 8 | 52.8 | 6.6 | 0.8 | 0.81 | |
| | Proximity | 8 | 45.6 | 5.7 | 0.8 | | |
| | Coherence | 8 | 60.8 | 7.6 | 0.83 | | |
| Element design | Color | 8 | 58.24 | 7.28 | 0.88 | 0.86 | |
| | Character | 8 | 54.8 | 6.85 | 0.83 | | |
| | Patterning | 8 | 55.6 | 7.05 | 0.87 | | |
| Structural design | Structural rendering | 8 | 56.16 | 7.02 | 0.79 | 0.807 | |
| | Knowledge organization | 8 | 58.4 | 7.3 | 0.8 | | |
| | Layout structure | 8 | 47.2 | 5.9 | 0.83 | | |

| Evaluation dimension | Mean | Standard deviation | Evaluation factor | Mean | Standard deviation |
|---|---|---|---|---|---|
| Content design | 4.225 | 0.713 | Clear structure | 4.21 | 0.843 |
| | | | Consistency of formal content | 4.315 | 0.723 |
| | | | Strong logic | 4.15 | 0.674 |
| Color design | 4.2 | 0.702 | Color highlights | 4.172 | 0.817 |
| | | | Rationality of color collocation | 4.21 | 0.705 |
| | | | Color contrast | 4.22 | 0.784 |
| Graphic design | 4.39 | 0.738 | Novelty of the presentation form | 4.54 | 0.626 |
| | | | Design style consistency | 4.31 | 0.896 |
| | | | Clear, beautiful design | 4.32 | 0.792 |
| Emotional design | 4.363 | 0.689 | Deepen cultural impressions | 4.35 | 0.629 |
| | | | Deepen cultural understanding | 4.36 | 0.659 |
| | | | Improve cultural interest | 4.38 | 0.779 |

To ascertain the practical implications of applying the artistic digital design technology introduced in this paper to the design of Zhuang brocade lamps and lanterns, a satisfaction feedback survey is conducted in this section.

The expert satisfaction evaluation covers two dimensions of the Zhuang brocade lamp and lantern designs: content and form. The expert satisfaction scale has a total score of 100 points, and each dimension has four evaluation levels (excellent, good, medium, and poor) scored 4, 3, 2, and 1 points, respectively. The statistical results are shown in Table 2. The total average score across all evaluation indices is 82.22, and the score rates of the four evaluation indices (content design, presentation design, element design, and structural design) are 0.8, 0.81, 0.86, and 0.807, respectively, all at or above 0.8. This indicates that the art digital design technology proposed in this paper is effective in practical application. Among the design elements, color and patterning (the graphic visual communication element) received relatively high average scores of 7.28 and 7.05, indicating that visual communication design principles were followed well in the practice of art digital design. The score rates of grace, proximity, and coherence under presentation design are 0.8, 0.8, and 0.83, also at or above 0.8, indicating that the selection and presentation of content and form in the proposed technology are reasonable and effective in practical art digital design.
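In Table 2, each dimension score rate matches the arithmetic mean of the score rates of its three sub-indices. The sketch below recomputes the four dimension score rates from the tabulated sub-index values and checks them against the 0.8 level referred to in the text. The data layout and names are illustrative; rounding to three decimals before the comparison is a choice made here so that floating-point artifacts (for example, a value slightly below 0.8) do not flip the result.

```python
"""Recompute Table 2 dimension score rates from sub-index score rates (sketch)."""

# Sub-index score rates copied from Table 2.
SCORE_RATES = {
    "Content design": {"Breadth": 0.77, "Depth": 0.8, "Correlation degree": 0.83},
    "Presentation design": {"Grace": 0.8, "Proximity": 0.8, "Coherence": 0.83},
    "Factor design": {"Colour": 0.88, "Character": 0.83, "Patterning": 0.87},
    "Architecture design": {
        "Structural rendering": 0.79,
        "Knowledge organization": 0.8,
        "Layout structure": 0.83,
    },
}

THRESHOLD = 0.8  # level used in the text when judging the score rates


def dimension_rate(sub_rates: dict[str, float]) -> float:
    """Mean of the sub-index score rates, rounded to three decimals."""
    return round(sum(sub_rates.values()) / len(sub_rates), 3)


if __name__ == "__main__":
    for dimension, subs in SCORE_RATES.items():
        rate = dimension_rate(subs)
        status = "meets" if rate >= THRESHOLD else "below"
        print(f"{dimension:20s} {rate:.3f} ({status} {THRESHOLD})")
```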

This section presents the ordinary respondents’ satisfaction with their experience of the Zhuang brocade lamps designed using the art digital design technology in this paper. The evaluation is divided into four dimensions: content design, color design, graphic design, and emotional design. The scale ranges from 1 to 5, corresponding to five attitudes: “strongly disagree,” “disagree,” “neutral,” “agree,” and “strongly agree.” The results are shown in Table 3; a higher mean directly reflects higher satisfaction. The means for content design, color design, graphic design, and emotional design are 4.225, 4.2, 4.39, and 4.363, respectively, all at or above 4.2, indicating that respondents are generally satisfied with the design of the Zhuang brocade lamps. In the content design dimension, the standard deviation of the clear structure factor is 0.843, indicating that satisfaction with this item is less consistent and that it needs further optimization. Every factor in the emotional design and graphic design dimensions has a mean of at least 4.2. However, from the perspective of visual communication elements, the standard deviations of the color highlights factor (color design) and the design style consistency factor (graphic design) are 0.817 and 0.896, respectively, indicating a noticeable polarization of respondents’ satisfaction on these two factors. The highlighting of design colors and the consistency of the overall design style can therefore be further optimized.

Overall, the mean of every evaluation dimension and factor in the ordinary respondents’ satisfaction evaluation exceeds 4, indicating that the Zhuang brocade lamps designed with the proposed technology match the respondents’ aesthetic preferences well.
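In Table 3, each dimension mean agrees, to within rounding, with the unweighted mean of its three factor means, and the factors singled out as polarized are exactly those whose standard deviation exceeds 0.8. The sketch below reproduces both observations from the tabulated values; the 0.8 cutoff mirrors the text, and the data layout and function names are illustrative assumptions.

```python
"""Dimension means and high-dispersion factors for Table 3 (sketch)."""

# (factor mean, factor standard deviation), copied from Table 3.
TABLE_3 = {
    "Content design": {
        "Clear structure": (4.21, 0.843),
        "Consistency of formal content": (4.315, 0.723),
        "Strong logic": (4.15, 0.674),
    },
    "Color design": {
        "Color highlights": (4.172, 0.817),
        "Rationality of color collocation": (4.21, 0.705),
        "Color contrast": (4.22, 0.784),
    },
    "Graphic design": {
        "Novelty of the presentation form": (4.54, 0.626),
        "Design style consistency": (4.31, 0.896),
        "Clear, beautiful design": (4.32, 0.792),
    },
    "Emotional design": {
        "Deepen cultural impressions": (4.35, 0.629),
        "Deepen cultural understanding": (4.36, 0.659),
        "Improve cultural interest": (4.38, 0.779),
    },
}

SD_CUTOFF = 0.8  # the text reads SD above 0.8 as a sign of polarized ratings


def dimension_mean(factors: dict[str, tuple[float, float]]) -> float:
    """Unweighted mean of the factor means within one dimension."""
    return sum(mean for mean, _ in factors.values()) / len(factors)


def dispersed_factors(table: dict, cutoff: float = SD_CUTOFF) -> list[str]:
    """Factors whose standard deviation exceeds the cutoff, in table order."""
    return [
        name
        for factors in table.values()
        for name, (_, sd) in factors.items()
        if sd > cutoff
    ]


if __name__ == "__main__":
    for dimension, factors in TABLE_3.items():
        print(f"{dimension:16s} {dimension_mean(factors):.3f}")
    print("High-dispersion factors:", dispersed_factors(TABLE_3))
```

An unweighted mean is appropriate here only because every dimension has the same number of factors and, presumably, the same respondents; with unequal group sizes a weighted mean would be needed.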

This paper starts from visual communication elements and proposes an art digital design composite model based on style migration. Using Zhuang brocade lamps as a case study, it analyzes the application effect of the proposed art digital design technology. The key findings are as follows:

(1) In the evaluation of the style migration effect, the combined percentage of “very recognized” and “more recognized” ratings exceeds 30% for style simulation, image appearance, cultural connotation, and style similarity. However, for every aspect other than style simulation, the combined percentage of “relatively unrecognized” and “very unrecognized” ratings exceeds 20%. The design of the Zhuang brocade lamps and lanterns therefore partially meets respondents’ aesthetic preferences, but there is ample room for improvement, particularly in the depth and richness of the cultural connotations conveyed.

(2) In the evaluation of the artistic design effect, color richness is the only indicator with an average score above 4 (4.03). The average scores for layer richness, element richness, randomness, color saturation, and color brightness are 3.54, 3.72, 3.74, 3.50, and 3.76, respectively, all between 3 and 4. The design of the Zhuang brocade lamps and lanterns performs well in color richness, while layer and element design, as well as color saturation and brightness, still need optimization.

(3) In the expert evaluation of art design satisfaction, the total average score across all evaluation indices is 82.22. The score rates of the four evaluation indices (content design, presentation design, element design, and structural design) are 0.8, 0.81, 0.86, and 0.807, respectively, all at or above 0.8. This indicates that the proposed art digital design technology is effective in practical application.

(4) In the ordinary respondents’ evaluation of art design satisfaction, the mean scores for content design, color design, graphic design, and emotional design are 4.225, 4.2, 4.39, and 4.363, respectively, all at or above 4.2. The standard deviations of the clear structure, color highlights, and design style consistency factors exceed 0.8, indicating considerable variation and some polarization in satisfaction ratings. Although the Zhuang brocade lamp design based on the proposed technology aligns well with the respondents’ aesthetic preferences, its structural design, color design, and style design can be further optimized.

Overall, the art digital design composite model based on style migration proposed in this paper provides a useful technical reference for art digital design and has achieved good results in practical application.

This research was supported by the Natural Science Fund of Gansu Province in 2022: Research on brand positioning of tourism culture and dissemination of cultural and creative products in Gansu Province (NO. 22JR5RA548).