This paper introduces virtual production technology that combines a GIS engine and a game engine with augmented reality and LED screens. By identifying a target image, a virtual three-dimensional model is added to the real scene, and its position and angle are controlled in real time according to the position and angle of the target image, which improves the fusion between the three-dimensional model and the real scene. Using the calling interfaces of the different scenes and a scene conversion matrix, a multi-dimensional and multi-temporal visualization scene is provided. The application of virtual production technology in digital film and television and its expansion into the game field are explored through structural equation modeling and correlation analysis. The results show that virtual production entered its heyday in 2010-2018, with more than 200 virtual production movies released worldwide. Performance expectation, i.e., the belief that virtual production technology can influence the development of the film and television industry to a large extent, has a path coefficient of 0.941. The immersiveness, interactivity, usefulness, ease of use, entertainment, attitude, and willingness of virtual games are all significant at the 0.01 level and positively affect the player’s attitude. This paper thus explores the development and influencing factors of virtual production in film and television and analyzes the expansion of its application in the field of digital film and television.

The new production paradigm of modern “virtual production” technology has had an important impact on the visual modeling narrative of film and television [1-2]. In the social context of the “post-truth era”, virtual production constructs a “visual pleasure” modeling narrative from two aspects, spatial modeling and character modeling, based on “visual presence” and “perceived authenticity” [3]. In the context of consumerism, based on “visual immersion” and “visual appeal”, virtual production constructs a “visual spectacle” narrative from two aspects: conceptual resonance and psychological interaction [4-6]. Virtual production optimizes and innovates the visual modeling narrative of the film in terms of concept, method, and form, so that the audience can obtain unique visual pleasure, aesthetic perception, and cultural experience [7-8].

Traditional film production is largely a linear process. After the script is written, the crew scouts locations or builds sets in the studio according to the director’s needs, while the actors audition and are fitted for costumes and makeup, and the director and the various departments settle the style of the film [9-10]. Once this stage is complete, the director and the director of photography work out the shots, lighting, and other details; any green-screen shooting must also be completed together with the post-production special effects department [11-13]. The defining characteristic of virtual production technology is that it breaks this linear production process, a change that is visible from team formation and pre-planning through on-site shooting and post-production.

At present, virtual production technology is increasingly being applied to domestic movie production [14]. The film and television industry is watching the development of virtual production closely, and many directors have shown their willingness to embrace the new technology and use it as a new tool for movie production [15]. Whether and how to use this new tool still depends, from the filmmaker’s point of view, on the core issues of project cost, production cycle, and expected effect [16-17]. If virtual production technology can reduce the cost, shorten the cycle, and greatly improve the shooting effect of a project, then it is a good choice [18-19].

In this paper, we first introduce in detail the technology behind virtual filmmaking, the core of which uses AR and a GIS engine to integrate the digital visual assets of the game. The engine’s on-site dynamic lighting is combined with hybrid virtual production, and the output is shot with a virtual camera matched to the physical camera. A questionnaire is used to define the factors that influence the application of virtual reality technology in the film and television industry. Second, through data collection and scale testing, the sample data undergo reliability and validity testing, exploratory factor analysis, correlation analysis, and structural equation modeling. These analyses are used to construct a model of the factors influencing the application of virtual reality technology in the film and television industry, and to clarify the correlations between those factors, the application of virtual filming in the film and television industry, and its expansion in the digital film and television field.

Virtual production is a new technology and process pipeline for film and television production that has emerged in the last few years. MPC defines it as: virtual production combines virtual reality and augmented reality with GIS and game engine technology to enable producers to see scenes unfolding in front of them as if they were being synthesized and filmed on location. To further illustrate, virtual filmmaking is the realization of virtual reality imaging with visual digital assets carried by an image engine for image projection on an array of LED screens.

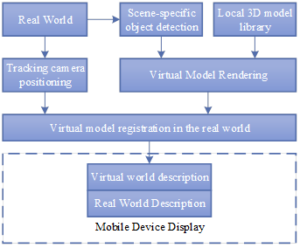

The framework of the augmented reality (AR) system is shown in Fig. 1. An AR pipeline starts with the video frames acquired by the camera and ends with the rendered virtual objects displayed on the screen. The AR application continuously searches the input image frames for a marker object to use as a tracking target; once the target is tracked, the virtual object is displayed on the screen at the target’s location. A complete AR system usually consists of four parts: camera tracking and localization, virtual model rendering, 3D registration, and system display.

Camera tracking and localization is the first step of AR technology, which is used to obtain and set the position and attitude of the camera. This module can obtain real-world information, laying the foundation for the subsequent rendering of 3D models as well as virtualization, and is an extremely important part of realizing AR technology.

The video captured through the camera lens consists of a sequence of image frames, and each image can be understood in the computer as a two-dimensional array of pixel data. Calibration uses the projection transformation between coordinate systems to obtain the corresponding transformation matrix, from which the camera’s internal parameters are recovered and its orientation and position are computed.

Three levels of system coordinates:

World Coordinate System \(\left(X_{w} ,Y_{w} ,Z_{w} \right)\): Based on the real world coordinate system, which can also be called the global coordinate system, the world coordinate system has no relation to the usual longitude and latitude for locating the physical position in the real world, and it is defined separately to deal with the three-dimensional space of the real world.

Camera coordinate system \(\left(X_{c} ,Y_{c} ,Z_{c} \right)\): a three-dimensional coordinate system attached to the camera in the device, following the front projection model, with the optical center of the camera as the origin and the camera optical axis as the \(Z\) axis; the \(\left(X_{c} ,Y_{c} \right)\) plane is parallel to the physical coordinate system of the image.

Image coordinate system \((X,Y)\): an image can be represented in two ways, by a physical coordinate system or by a pixel coordinate system. The physical coordinate system of an image is a planar rectangular coordinate system parallel to the \(\left(X_{c} ,Y_{c} \right)\) plane of the camera coordinate system. The pixel coordinate system of an image is a planar rectangular coordinate system measured in pixels, with its origin at the upper-left corner of the image and its axes along the row and column directions of the digital image.

With the above definitions of the various spatial coordinate systems, it is possible to establish the transformation relationship between the real and virtual coordinate systems:

Real World Coordinate System and Camera Coordinate System Conversion

The position of the camera may change at any time, so the world coordinate system serves as a fixed reference for quantifying the relative position of the camera and thus for obtaining the position of real-world objects relative to the camera. Let \(X_{w} Y_{w} Z_{w}\) denote the world coordinate system, \(R\) the rotation matrix describing the angle between the two coordinate systems, and \(t\) the translation vector describing the offset between them. A point \(P_{w}\) in the world coordinate system is then represented as the point \(P_{c}\) in the camera coordinate system: \[\label{GrindEQ__1_} \begin{pmatrix} X_{P_c} \\ Y_{P_c} \\ Z_{P_c} \\ 1 \end{pmatrix} = \begin{pmatrix} R & t \\ 0 & 1 \end{pmatrix} \begin{pmatrix} X_{P_w} \\ Y_{P_w} \\ Z_{P_w} \\ 1 \end{pmatrix} = M_{ort} \begin{pmatrix} X_{P_w} \\ Y_{P_w} \\ Z_{P_w} \\ 1 \end{pmatrix}. \tag{1}\]
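The world-to-camera mapping of Eq. (1) can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the helper names (`rotation_z`, `extrinsic_matrix`, `world_to_camera`) and the sample rotation and translation are hypothetical and do not come from the system described here.

```python
import math

def rotation_z(theta):
    """3x3 rotation about the Z axis by theta radians (illustrative choice of R)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def extrinsic_matrix(R, t):
    """Assemble the 4x4 block matrix [[R, t], [0, 1]] of Eq. (1)."""
    return [R[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def world_to_camera(M, p_world):
    """Map a world point (X, Y, Z) to camera coordinates via homogeneous coordinates."""
    p_h = list(p_world) + [1.0]  # append the homogeneous coordinate 1
    p_c = [sum(M[i][k] * p_h[k] for k in range(4)) for i in range(4)]
    return p_c[:3]

# Hypothetical pose: 90 degree rotation about Z, then a shift of 5 units along Z.
R = rotation_z(math.pi / 2)
t = [0.0, 0.0, 5.0]
M = extrinsic_matrix(R, t)
p_cam = world_to_camera(M, (1.0, 0.0, 0.0))
print(p_cam)
```

Keeping \(R\) and \(t\) in a single 4x4 matrix means that a chain of coordinate changes reduces to one matrix product, which is why the homogeneous form is used.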

Conversion of camera coordinate system and image coordinate system

The camera on the mobile device uses the pinhole imaging principle to convert the camera’s 3D coordinate system into a 2D image coordinate system suitable for display on the device screen. The camera coordinate system takes the center of the device camera as its origin and the viewing direction of the camera as the positive direction of the \(Z\) axis. The positive \(X\) axis is a direction perpendicular to the \(Z\) axis that passes through the center of the camera, and the positive \(Y\) axis is determined from the \(X\) and \(Z\) axes by the right-hand rule. By the principle of similar triangles, the point \(P_{c}(X_{c},Y_{c},Z_{c})\) in the camera coordinate system and the point \(P(X,Y)\) in the image physical coordinate system are related by: \[\label{GrindEQ__2_} \begin{cases} {X=f\left(X_{c} /Z_{c} \right)}, \\ {Y=f\left(Y_{c} /Z_{c} \right)}, \end{cases} \tag{2}\] where \(f\) denotes the distance from the camera coordinate origin to the image plane, i.e., the focal length.
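As a minimal sketch of Eq. (2), the function below projects a camera-space point onto the image plane. The function name `project_pinhole`, the sample point, and the focal length value are assumptions made for illustration only.

```python
def project_pinhole(p_cam, f):
    """Project a camera-space point (Xc, Yc, Zc) to image-plane coordinates (X, Y).

    Implements X = f * Xc / Zc and Y = f * Yc / Zc from Eq. (2).
    """
    Xc, Yc, Zc = p_cam
    if Zc <= 0:
        # A point on or behind the camera plane has no valid projection.
        raise ValueError("point must lie in front of the camera (Zc > 0)")
    return (f * Xc / Zc, f * Yc / Zc)

# Hypothetical values: a point 4 units in front of the camera, focal length 0.05.
X, Y = project_pinhole((2.0, 1.0, 4.0), f=0.05)
print(X, Y)
```

Doubling \(Z_{c}\) halves both image coordinates, which is the perspective foreshortening expressed by the similar-triangles argument.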

2) Graphical rendering techniques

The virtual model rendering module provides the AR system with virtual images that complement the real world. It is the second step of AR technology, and its main function is to generate the relevant virtual graphics. When the system detects a change in the real-world scene, it calls up the pre-generated virtual graphics for display. Realizing this module requires a large number of virtual models that match the surrounding environment. The rendering algorithm uses the real image acquired by the camera to determine the rendering range and then calculates the effect of illumination on the objects. Light sources in the virtual world play a role similar to that of the sun and lamps in the real world; unlike the real world, however, the virtual world often requires a large number of auxiliary light sources during rendering to achieve special effects.

3) Three-dimensional registration techniques

The 3D registration module is the core of AR technology. It determines the positional relationship between real-world objects or scenes and the rendered virtual graphics, and thereby realizes the seamless combination of virtual and real scenes. The virtual image is superimposed on the real scene, and when the camera moves in space, the virtual graphic changes accordingly. Viewed from any angle, the relative positions of the virtual graphics and the real scene must remain consistent, i.e., they must preserve geometric consistency in the sense of Euclidean geometry. 3D registration dynamically tracks the objects in the real scene and registers the position of the camera. Tracking registration detects the target object, and camera calibration is then used to extract the target’s information and compute its relationship to the real scene. This yields the position and angle of the camera relative to the scene, which serves as the basis for adding virtual information and achieving seamless integration with reality.

The above four parts together constitute AR technology; none of them can be omitted, and the system repeats this process continuously.

Scene construction under the GIS engine relies mainly on remote sensing images, digital elevation models, oblique photography models, and similar data to build watershed-level scenes. The scene has a real coordinate system and faithfully reflects the spatial position, shape, and texture of spatial objects. It supports three-dimensional spatial analysis and operations as well as the management of complex spatial objects, and it maps geographic information from the real world to the virtual world more accurately and concretely. The GIS engine is used to build a basin-level visualization scene and to add material, animation, and light and shadow effects to the model data in the scene, which restores the engineering scene with high fidelity and enables the simulation of engineering operation.

1) Cross-platform scenario integration

To support different production applications and the visualization of different film and television scenes, two engines are needed to express scenes at different scales. Using 3D geospatial data to shape virtual 3D environments opens up many possibilities for geographic applications. However, the file formats of these data sources are not standardized, and game engines support only a limited number of file formats, so data conversion is usually required when applying geospatial data to virtual 3D environments built with a game engine. In addition, when a networked 3D application is built with a game engine, loading huge static resources into the 3D simulation scene puts heavy pressure on network data transmission, which in turn degrades the visualization. The platform therefore uses the GIS engine to provide large-scale scenes of the watershed and the game engine to provide refined scenes of the project. To ensure the precise integration of scenes at different scales, a fast scene transformation control technique is developed.

2) Determine the scene feature parameter vector

Corresponding to the geodetic coordinate system of the GIS engine, a coordinate system is added to the game engine, with the scene viewpoint coordinates, scene scale, and viewing angle serving as the scene feature vector.

The initial scene feature parameter vector is \(A\), expressed as: \[\label{GrindEQ__3_} A=[x,y,z,1]^{T} ,\tag{3}\] where \(A\) is the initial scene feature parameter vector, \(x\) and \(y\) are the \(x\)-direction and \(y\)-direction coordinates of the initial scene, and \(z\) is the initial scene height.

The target scene feature parameter vector is \(A{'}\), expressed as: \[\label{GrindEQ__4_} A{'} =\left[x{'} ,y{'} ,z{'} ,1\right]^{T}.\tag{4}\]

3) Solving the transformation matrix

By analyzing the projection of different scene feature parameter vectors, the transformation matrix \(T_{ranM}\) can be solved. The trigger condition for scene switching is set in advance; once the condition is satisfied, the transformation matrix is solved and applied to the initial feature vector to realize the transformation. In the rotation component of this matrix, \(R_{x}\), \(R_{y}\), and \(R_{z}\) denote the rotation transformation matrices for the \(x\), \(y\), and \(z\) directions, and \(\theta _{x}\), \(\theta _{y}\), and \(\theta _{z}\) denote the corresponding rotation angles.

4) Introduction of transformation matrices

Introducing the homogeneous matrix \(T_{ranM}\) as the scene transformation matrix, \(T_{ranM}\) can be expressed as: \[\label{GrindEQ__5_} T_{ranM} =TSR .\tag{5}\]

\(T\) is the translation transformation matrix, which can be expressed as: \[\label{GrindEQ__6_} T=\left[\begin{array}{cccc} {1} & {0} & {0} & {\text{Translation}.x } \\ {0} & {1} & {0} & {\text{Translation}.y } \\ {0} & {0} & {1} & {\text{Translation}.z } \\ {0} & {0} & {0} & {1} \end{array}\right],\tag{6}\] where \(\text{Translation}.x\), \(\text{Translation}.y\), and \(\text{Translation}.z\) are the translation distances in the \(x\), \(y\), and \(z\) directions during the transformation.

\(S\) is the scaling transformation matrix, which can be expressed as: \[\label{GrindEQ__7_} S=\left[\begin{array}{cccc} {\text{Scale}.x } & {0} & {0} & {0} \\ {0} & {\text{Scale}.y } & {0} & {0} \\ {0} & {0} & {\text{Scale}.z } & {0} \\ {0} & {0} & {0} & {1} \end{array}\right] ,\tag{7}\] where \(\text{Scale}.x\), \(\text{Scale}.y\), and \(\text{Scale}.z\) are the scaling factors in the \(x\), \(y\), and \(z\) directions during the transformation.

\(R\) is the rotation transformation matrix, which can be expressed as:

\[\begin{aligned} R=R_{x} R_{y} R_{z}=\left[\begin{array}{cccc} {1} & {0} & {0} & {0} \\ {0} & {\cos \theta _{x} } & {\sin \theta _{x} } & {0} \\ {0} & {-\sin \theta _{x} } & {\cos \theta _{x} } & {0} \\ {0} & {0} & {0} & {1} \end{array}\right]\left[\begin{array}{cccc} {\cos \theta _{y} } & {0} & {-\sin \theta _{y} } & {0} \\ {0} & {1} & {0} & {0} \\ {\sin \theta _{y} } & {0} & {\cos \theta _{y} } & {0} \\ {0} & {0} & {0} & {1} \end{array}\right] \times\left[\begin{array}{cccc} {\cos \theta _{z} } & {\sin \theta _{z} } & {0} & {0} \\ {-\sin \theta _{z} } & {\cos \theta _{z} } & {0} & {0} \\ {0} & {0} & {1} & {0} \\ {0} & {0} & {0} & {1} \end{array}\right]. \end{aligned}\tag{8}\]

5) Implementing parameter conversions

Applying the transformation matrix \(T_{ranM}\) to the initial scene feature parameter vector can be expressed as: \[\label{GrindEQ__9_} A{'} =T_{ranM} A.\tag{9}\]

The feature parameter vector conversion from the initial scene to the target scene is realized through matrix operation, so as to realize the unification and precise integration of the scene images of GIS engine and game engine.
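The conversion in Eqs. (5)-(9) can be illustrated with a short, self-contained sketch. The function names and the numeric translation, scale, and angle values are hypothetical; the rotation matrices follow the sign convention written in Eq. (8).

```python
import math

def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def translation(tx, ty, tz):
    """Translation matrix T of Eq. (6)."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def scaling(sx, sy, sz):
    """Scaling matrix S of Eq. (7)."""
    return [[sx, 0, 0, 0], [0, sy, 0, 0], [0, 0, sz, 0], [0, 0, 0, 1]]

def rotation(theta_x, theta_y, theta_z):
    """Rotation matrix R = Rx Ry Rz with the signs used in Eq. (8)."""
    cx, sx = math.cos(theta_x), math.sin(theta_x)
    cy, sy = math.cos(theta_y), math.sin(theta_y)
    cz, sz = math.cos(theta_z), math.sin(theta_z)
    Rx = [[1, 0, 0, 0], [0, cx, sx, 0], [0, -sx, cx, 0], [0, 0, 0, 1]]
    Ry = [[cy, 0, -sy, 0], [0, 1, 0, 0], [sy, 0, cy, 0], [0, 0, 0, 1]]
    Rz = [[cz, sz, 0, 0], [-sz, cz, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
    return matmul(matmul(Rx, Ry), Rz)

def scene_transform(trans, scale, angles):
    """Compose T_ranM = T S R as in Eq. (5)."""
    return matmul(matmul(translation(*trans), scaling(*scale)), rotation(*angles))

def apply_transform(M, A):
    """Compute A' = T_ranM A as in Eq. (9) for a homogeneous 4-vector A."""
    return [sum(M[i][k] * A[k] for k in range(4)) for i in range(4)]

# Hypothetical switch: scale by 2, shift by (10, 20, 0), no rotation.
A = [1.0, 2.0, 3.0, 1.0]
T_ranM = scene_transform((10.0, 20.0, 0.0), (2.0, 2.0, 2.0), (0.0, 0.0, 0.0))
A_prime = apply_transform(T_ranM, A)
print(A_prime)
```

Because the product is ordered \(TSR\), the vector is rotated first, then scaled, then translated, so the translation distances are expressed in target-scene units and are not themselves scaled.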

Digital effects in film and television use digital technology to create complex virtual images and enhance visual impact. Digital special effects have become an essential element of the film industry. They allow creators to convey both a convincing sense of reality and a wide range of imagined futures, unconstrained by time and space, and to display the artistic charm of film. With the development of science and technology, their forms of expression and technical means have become more efficient. In contemporary film and television in particular, digital special effects make full use of advanced, interactive digital technology to analyze and process audiovisual information more effectively, so the art of film is constantly upgraded. The use of digital special effects has raised the artistic expression of film to a very high level. In Jurassic Park, for example, digital effects produced a vivid depiction of dinosaurs. Digital effects are used in science fiction films, horror films, war films, and other genres, and research on their application can provide a theoretical reference for the development of film and the cultivation of talent.

This study uses SPSS 17.0 statistical software to analyze the data, including descriptive statistics and reliability and validity analysis. AMOS 20.0 is used to build the structural equation model and examine the strength of the relationships between variables. In the structural equation analysis, a conceptual model was first derived from the theoretical analysis and specified in AMOS 20.0. The original model was then modified according to the AMOS 20.0 results to determine the model that best fits both theory and data, and the final model and its solution were interpreted.

The principle of structural equation modeling is to minimize the difference between the sample covariance matrix \(S\) and the theoretical covariance matrix \(\Sigma \left(\theta \right)\) implied by the hypothesized model; if the hypotheses are reasonable, the two covariance matrices should be equal. The fitting function \(F\left(S,\Sigma \left(\theta \right)\right)\) expresses how close the two covariance matrices are. Many fitting functions exist; the two most commonly used are described below.

Maximum Likelihood Estimation: Maximum Likelihood Estimation is the most widely used parameter estimation method, which requires that the variable data must be continuous and in line with the characteristics of the multivariate normal distribution, otherwise the fitting effect will be very poor or even produce erroneous estimation results. The formula is shown in (10): \[\label{GrindEQ__10_} F_{ML} =\log \left|\Sigma \left(\theta \right)\right|+tr\left[S\Sigma ^{-1} \left(\theta \right)\right]-\log \left|S\right|-\left(p+q\right) . \tag{10}\]

Generalized least squares estimation: Maximum likelihood estimation requires that the data variables follow a normal distribution, which not all data satisfy. Generalized least squares estimation is not limited by the distribution of the data variables. When the data are normally distributed, its results are very similar to those of maximum likelihood estimation, and when the data are not normally distributed, it can still give good results. The method minimizes a weighted sum of squared differences between the two covariance matrices, with fixed weights applied to the differences, as in Eq. (11):

\[\label{GrindEQ__11_} F_{GLS} =\frac{1}{2} tr\left(\left\{\left[S-\Sigma \left(\theta \right)\right]W^{-1} \right\}^{2} \right) .\tag{11}\]

Structural equation modeling generally uses \(S^{-1}\) as the weighting matrix, i.e., \(W^{-1} =S^{-1}\). Substituting this into Eq. (11) gives Eq. (12), and the results obtained from the estimation are evaluated through Eq. (13): \[\label{GrindEQ__12_} F_{GLS} =\frac{1}{2} tr\left(\left\{\left[S-\Sigma \left(\theta \right)\right]S^{-1} \right\}^{2} \right)=\frac{1}{2} tr\left\{\left[I-\Sigma \left(\theta \right)S^{-1} \right]^{2} \right\} , \tag{12}\] \[\label{GrindEQ__13_} R^{2} = 1-\frac{|\hat{\psi }|}{\left|\Sigma _{YY} \right|}. \tag{13}\]
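To make the two fitting functions concrete, the sketch below evaluates Eq. (10) and Eq. (12) for a pair of 2x2 covariance matrices. It is restricted to the 2x2 case so that the determinant and inverse can be written by hand; the helper names and the numeric matrices are illustrative assumptions, not data from this study.

```python
import math

def det2(M):
    """Determinant of a 2x2 matrix."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def inv2(M):
    """Inverse of a 2x2 matrix."""
    d = det2(M)
    return [[M[1][1] / d, -M[0][1] / d], [-M[1][0] / d, M[0][0] / d]]

def matmul2(A, B):
    """Product of two 2x2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def trace2(M):
    """Trace of a 2x2 matrix."""
    return M[0][0] + M[1][1]

def f_ml(S, Sigma):
    """Eq. (10): log|Sigma| + tr(S Sigma^-1) - log|S| - (p + q)."""
    n_vars = len(S)  # p + q, the number of observed variables
    return (math.log(det2(Sigma)) + trace2(matmul2(S, inv2(Sigma)))
            - math.log(det2(S)) - n_vars)

def f_gls(S, Sigma):
    """Eq. (12): 0.5 * tr({[S - Sigma] S^-1}^2)."""
    diff = [[S[i][j] - Sigma[i][j] for j in range(2)] for i in range(2)]
    D = matmul2(diff, inv2(S))
    return 0.5 * trace2(matmul2(D, D))

# Hypothetical sample covariance S and a model that ignores the covariance term.
S = [[2.0, 0.5], [0.5, 1.0]]
Sigma_misfit = [[2.0, 0.0], [0.0, 1.0]]
print(f_ml(S, S), f_gls(S, S))                        # perfect fit: both near 0
print(f_ml(S, Sigma_misfit), f_gls(S, Sigma_misfit))  # misfit: both positive
```

Both functions vanish when \(\Sigma \left(\theta \right)=S\) and grow as the model-implied matrix departs from the sample matrix, which is the property the estimation exploits.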

Correlation analysis is a statistical analysis method that verifies whether there is correlation between things or random variables, and explores and analyzes the correlation degree and direction of correlation between variables.

Bivariate correlation analysis is a statistical method that examines the interdependence between two sets of data or two random variables. The correlation coefficient of variable \(X\) and variable \(Y\) is shown in Eq. (14): \[\begin{aligned} \label{GrindEQ__14_} \rho _{X,Y} =\frac{cov(X,Y)}{\sigma _{X} \sigma _{Y} } =\frac{E\left(\left(X-\mu _{X} \right)\left(Y-\mu _{Y} \right)\right)}{\sigma _{X} \sigma _{Y} } =\frac{E(XY)-E(X)E(Y)}{\sqrt{E\left(X^{2} \right)-E^{2} (X)} \sqrt{E\left(Y^{2} \right)-E^{2} (Y)} } \end{aligned}\tag{14}\] where \(cov\left(X,Y\right)\) is the covariance of \(X\) and \(Y\), \(\mu _{X}\) and \(\mu _{Y}\) are their means, and \(\sigma _{X} \sigma _{Y}\) is the product of their standard deviations.
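A minimal sketch of Eq. (14), using the last form of the formula with expectations replaced by sample means, is given below. The function name `pearson` and the sample values are hypothetical and are not taken from the questionnaire.

```python
import math

def pearson(xs, ys):
    """Correlation coefficient of Eq. (14) computed from sample means."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("xs and ys must have the same length of at least 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    mean_xy = sum(x * y for x, y in zip(xs, ys)) / n
    mean_x2 = sum(x * x for x in xs) / n
    mean_y2 = sum(y * y for y in ys) / n
    cov = mean_xy - mean_x * mean_y            # E(XY) - E(X)E(Y)
    sd_x = math.sqrt(mean_x2 - mean_x ** 2)    # sqrt(E(X^2) - E^2(X))
    sd_y = math.sqrt(mean_y2 - mean_y ** 2)    # sqrt(E(Y^2) - E^2(Y))
    return cov / (sd_x * sd_y)

# Hypothetical data: a perfectly increasing and a perfectly decreasing relationship.
r_pos = pearson([1, 2, 3, 4], [2, 4, 6, 8])
r_neg = pearson([1, 2, 3], [3, 2, 1])
print(r_pos, r_neg)
```

The coefficient lies between -1 and 1; its sign gives the direction of the relationship and its magnitude gives the strength.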

After 2009, virtual production entered a heyday of development: the production technology and process matured, and the use of virtual production special effects in film and television works became an industry practice. Many countries became involved in the field of virtual production, and many animation companies emerged. Mainstream movies entered the period of virtual production, and the quantity and quality of the works improved greatly. Representative virtual production movies from 2010 to 2018 are shown in Table 1. From 2010 to 2018, more than 200 virtual production movies were released worldwide, of which 53 had a box office of more than 300 million US dollars. At this stage, the development of the core technology related to virtual production reached a peak and its application field expanded further. As audiences became accustomed to the visual style of virtual production, artistic creation placed higher demands on visual performance. The progress and openness of the technology also enable individual users and small teams to create virtual productions.

| No. | Year | Title | Country |
|---|---|---|---|
| 1 | 2010 | Shrek Forever After | The United States |
| 2 | 2010 | How to Train Your Dragon | The United States |
| 3 | 2010 | Megamind | The United States |
| 4 | 2010 | Despicable Me | The United States |
| 5 | 2010 | Toy Story 3 | The United States |
| 6 | 2010 | Tangled | The United States |
| 7 | 2011 | Rio | The United States |
| 8 | 2011 | Kung Fu Panda 2 | The United States |
| 9 | 2011 | Puss in Boots | The United States |
| 10 | 2011 | The Adventures of Tintin | The United States |
| 11 | 2011 | Cars 2 | The United States |
| 12 | 2012 | Ice Age: Continental Drift | The United States |
| 13 | 2012 | Rise of the Guardians | The United States |
| 14 | 2012 | Madagascar 3 | The United States |
| 15 | 2012 | Dr. Seuss’ The Lorax | The United States |
| 16 | 2012 | Hotel Transylvania | The United States |
| 17 | 2012 | Brave | The United States |
| 18 | 2012 | Wreck-It Ralph | The United States |
| 19 | 2013 | The Croods | The United States |
| 20 | 2013 | Despicable Me 2 | The United States |
| 21 | 2013 | Monsters University | The United States |
| 22 | 2013 | Frozen | The United States |
| 23 | 2014 | Rio 2 | The United States |
| 24 | 2014 | How to Train Your Dragon 2 | The United States |
| 25 | 2014 | Penguins of Madagascar | The United States |
| 26 | 2014 | Big Hero 6 | The United States |
| 27 | 2014 | The Lego Movie | The United States |
| 28 | 2015 | Home | The United States |
| 29 | 2015 | Minions | The United States |
| 30 | 2015 | The SpongeBob Movie | The United States |
| 31 | 2015 | Hotel Transylvania 2 | The United States |
| 32 | 2015 | Inside Out | The United States |
| 33 | 2015 | The Good Dinosaur | The United States |
| 34 | 2016 | Ice Age Collision Course | The United States |
| 35 | 2016 | Trolls | The United States |
| 36 | 2016 | The Secret Life of Pets | The United States |
| 37 | 2016 | Sing | The United States |
| 38 | 2016 | Finding Dory | The United States |
| 39 | 2016 | Zootopia | The United States |
| 40 | 2016 | Moana | The United States |
| 41 | 2016 | The Angry Birds Movie | The United States |
| 42 | 2016 | Kung Fu Panda 3 | The United States |
| 43 | 2017 | The Boss Baby | The United States |
| 44 | 2017 | Despicable Me 3 | The United States |
| 45 | 2017 | Coco | The United States |
| 46 | 2017 | Cars 3 | The United States |
| 47 | 2017 | The Lego Batman Movie | The United States |
| 48 | 2018 | The Grinch | The United States |
| 49 | 2018 | Hotel Transylvania 3 | The United States |
| 50 | 2018 | Spider-Man: Into The Spider-Verse | The United States |
| 51 | 2018 | Ralph Breaks the Internet | The United States |
| 52 | 2018 | Incredibles 2 | The United States |
| 53 | 2018 | Peter Rabbit | The United States |

This study begins with a detailed analysis and study of virtual production technology and its application in the film and television industry. The theoretical model of the research on the influence factors of virtual production on the film and television industry is used to construct a test scale of the influence factors of the application of virtual production in the film and television industry. The question design of this research scale starts from five dimensions: performance expectation, effort expectation, community influence, convenience condition, and willingness to use.

V1: The use of virtual production technology can change the inherent mode of film narrative and enhance the interactive narrative development of the film.

V2: The application of virtual production technology in scriptwriting can increase the subjective perspective, weaken the montage and thus change the direction of scriptwriting.

V3: The use of virtual production technology for camera movement presents a first-person perspective, which enhances the immersive experience of the movie and also improves the director’s ability to grasp the overall situation of the film.

V4: Can I use virtual production technology in the film industry to improve my performance?

V4: I can learn virtual production technology through offline courses and online classes.

V5: I have a good foundation in photography and videography, so it’s easy for me to operate a panoramic camera.

V6: I have enough experience in post-production editing, so it’s easy for me to use AE to do post-production on VR videos.

V7: My classmates, colleagues, and friends are learning about virtual reality technology and discussing how to create scripts in depth.

V8: Experts in the field introduce the current situation and prospects of virtual reality technology in the film and television industry through lectures and conference reports.

V9: New media platforms often introduce the latest applications of virtual reality technology in the film and television industry, such as the promotion of movie launches.

The scale was distributed to film and television industry practitioners; 250 scales were distributed and all 250 were recovered. The descriptive statistics of the subjects are shown in Table 2. The basic information collected includes gender, age, education level, and the time spent watching movies, TV, or variety shows every day. Among the 250 valid responses, female respondents form the larger group at 66.00%. The 18-24 and 25-30 age groups are the largest, each accounting for 38.00%. Respondents with a bachelor’s degree account for 48.00%, followed by those with a graduate degree at 36.00%. For daily viewing time, 40.00% watch for 1-2.5 hours, followed by 28.00% who watch for 2.5-4 hours and 24.00% who watch for less than 1 hour.

| Topic | Options | Frequency | Ratio |
| --- | --- | --- | --- |
| Gender | Male | 85 | 34.00% |
| | Female | 165 | 66.00% |
| Age | Under 18 | 6 | 2.40% |
| | 18-24 | 95 | 38.00% |
| | 25-30 | 95 | 38.00% |
| | 31-35 | 30 | 12.00% |
| | Over 36 | 24 | 9.60% |
| Education | Junior middle school and below | 8 | 3.20% |
| | High school (or secondary vocational school) | 12 | 4.80% |
| | Junior college | 20 | 8.00% |
| | Undergraduate | 120 | 48.00% |
| | Graduate student | 90 | 36.00% |
| Daily viewing time (hours) | Under 1 | 60 | 24.00% |
| | 1-2.5 | 100 | 40.00% |
| | 2.5-4 | 70 | 28.00% |
| | 4-6 | 16 | 6.40% |
| | Over 6 | 4 | 1.60% |
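The ratios in the descriptive statistics follow directly from the frequencies and the sample size of 250. As a minimal sketch of that arithmetic (the dictionary layout and the helper name `ratios` are illustrative assumptions, not part of the original study), the age and viewing-time rows can be recomputed as follows:

```python
# Frequencies copied from the descriptive statistics table (n = 250).
frequencies = {
    "Age": {"Under 18": 6, "18-24": 95, "25-30": 95, "31-35": 30, "Over 36": 24},
    "Daily viewing time": {
        "Under 1": 60, "1-2.5": 100, "2.5-4": 70, "4-6": 16, "Over 6": 4,
    },
}


def ratios(counts):
    """Return each option's share of the group total as a percentage string."""
    total = sum(counts.values())
    return {option: f"{100 * n / total:.2f}%" for option, n in counts.items()}


age_ratios = ratios(frequencies["Age"])
viewing_ratios = ratios(frequencies["Daily viewing time"])
print(age_ratios)
print(viewing_ratios)
```

Each group sums to 250, so the same helper applies to every topic in the table.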

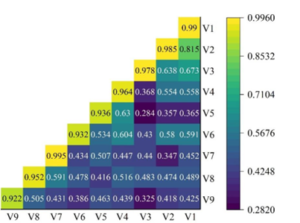

The fit of the hypothesized model is judged primarily from the values in the fitted covariance matrix, so assessing whether the model fits correctly requires a comprehensive judgment of the following indicators. The sample data covariance matrix is shown in Fig. 2. For each indicator, the largest relative covariance value is 0.99, 0.985, 0.978, 0.964, 0.936, 0.932, 0.995, 0.952, and 0.922, respectively, which suggests that the model of the influencing factors of the application of virtual production technology in the film and television industry proposed in this study is basically reasonable.
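To make the notion of a sample covariance matrix concrete, the following is a minimal sketch that computes the plain unbiased covariance matrix of questionnaire responses. The response data are hypothetical, the function name `covariance_matrix` is an assumption, and the "relative covariance" reported in the paper may be normalized differently from this textbook definition.

```python
def covariance_matrix(rows):
    """Unbiased sample covariance matrix; rows are respondents, columns are items."""
    n = len(rows)
    k = len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(k)]
    return [
        [
            sum((row[i] - means[i]) * (row[j] - means[j]) for row in rows) / (n - 1)
            for j in range(k)
        ]
        for i in range(k)
    ]


# Hypothetical 5-point Likert responses for three items (not data from the study).
responses = [
    [5, 4, 4],
    [3, 3, 2],
    [4, 4, 5],
    [2, 1, 2],
    [5, 5, 4],
]

cov = covariance_matrix(responses)
for row in cov:
    print([round(value, 3) for value in row])
```

The diagonal holds each item's variance and the off-diagonal entries hold the pairwise covariances, which is the structure a fitted model's implied covariance matrix is compared against.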

Hypotheses are made about the relationships among the variables in the research model of the factors influencing the application of virtual production technology in the film and television industry. Testing them determines whether each hypothesized path holds, that is, whether an influence relationship exists between the two latent variables on the path, and how large and how significant that relationship is. The results of the hypothesis testing are shown in Table 3. The path coefficient of performance expectation on willingness to use is 0.941, the highest of the four influencing factors. Performance expectation in this study refers to the expectation that virtual production technology can influence the development of the film and television industry to a large extent. The path coefficient of effort expectation on willingness to use is 0.542, the second highest of the four. The path coefficient of community influence on willingness to use is 0.430, ranking third. The path coefficient of facilitating conditions (listed as convenience conditions in Table 3) on willingness to use is 0.132, the lowest degree of influence. Facilitating conditions in this study refer to the support and assistance that film and television industry practitioners can obtain in understanding virtual production technology, that is, the extent to which the existing technological infrastructure, training capabilities, and access to information support their use of virtual production technology.

| Hypothesis | Variable relation | S.E. | C.R. | Standardized path coefficient | Test result |
| --- | --- | --- | --- | --- | --- |
| H1 | Performance expectation has a positive effect on the willingness of film industry practitioners to accept and use virtual production. | 0.144 | 0.145 | 0.941 | Supported |
| H2 | Effort expectation has a positive effect on the willingness of film industry practitioners to accept and use virtual production. | 0.130 | 0.130 | 0.542 | Supported |
| H3 | Community influence has a positive effect on the willingness of film industry practitioners to accept and use virtual production. | 0.067 | 0.069 | 0.430 | Supported |
| H4 | Convenience conditions have a positive effect on the willingness of film industry practitioners to accept and use virtual production. | 0.068 | 0.067 | 0.132 | Supported |

| | Immersion | Interactivity | Usefulness | Ease of use | Entertainment | Attitude | Intent |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Immersion | 1 | 0.799** | 0.738** | 0.621** | 0.769** | 0.707** | 0.623** |
| Interactivity | 0.798** | 1 | 0.822** | 0.666** | 0.891** | 0.813** | 0.804** |
| Usefulness | 0.736** | 0.821** | 1 | 0.715** | 0.847** | 0.764** | 0.779** |
| Ease of use | 0.621** | 0.667** | 0.717** | 1 | 0.719** | 0.716** | 0.711** |
| Entertainment | 0.768** | 0.891** | 0.848** | 0.718** | 1 | 0.83** | 0.824** |
| Attitude | 0.486** | 0.499** | 0.459** | 0.475** | 0.497** | 1 | 0.532** |
| Intent | 0.707** | 0.814** | 0.766** | 0.716** | 0.831** | 0.882** | 1 |

This paper examines the correlations among seven dimensions of the audience's response to virtual production technology in digital film and television: immersion, interactivity, usefulness, ease of use, entertainment, attitude, and willingness. The results of the correlation analysis are shown in Table 4.

The correlation coefficients between the attitudes of digital film and television viewers and each of the other factors are 0.707, 0.813, 0.764, 0.716, 0.830, and 0.882, which indicates that attitude and each of the other variables are mutually related. All of these correlations are significant at the 0.01 level, which indicates that immersion, interactivity, usefulness, ease of use, entertainment, and willingness all have a significant effect on attitude.

The correlation coefficients between willingness and each of the other factors are 0.623, 0.804, 0.779, 0.711, 0.824, and 0.532, respectively, so willingness and each of the other variables are also mutually related.

The correlation coefficients between perceived entertainment and immersion, interactivity, usefulness, and ease of use are 0.769, 0.891, 0.847, and 0.719, respectively, and these variables, together with willingness, all have a significant effect on perceived entertainment.

The correlation coefficients of perceived ease of use with digital film and television immersion, interactivity, and usefulness are 0.621, 0.666, and 0.715, respectively.

The correlation coefficients of perceived usefulness with digital film and television immersion and interactivity are 0.738 and 0.822, respectively, which indicates a positive relationship between perceived usefulness and these variables.

The correlation coefficient between digital film and television immersion and interactivity is 0.798. This correlation is high, indicating a significant relationship between immersion and interactivity.
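The significance statements above can be checked with a short calculation. The sketch below assumes the coefficients are Pearson correlations computed on the 250 returned questionnaires and uses an approximate two-sided 0.01 critical t value of 2.60 for 248 degrees of freedom; both are assumptions for illustration, since the paper does not state the exact procedure. The function names are likewise hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for H0: no correlation, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def critical_r(t_crit, n):
    """Smallest |r| whose t statistic reaches t_crit for sample size n."""
    return t_crit / math.sqrt(t_crit ** 2 + (n - 2))


N = 250        # returned questionnaires reported in the paper
T_CRIT = 2.60  # assumed approximate two-sided 0.01 critical value, df = 248

smallest_reported = 0.459  # smallest coefficient in the correlation table
r_needed = critical_r(T_CRIT, N)
t_smallest = t_statistic(smallest_reported, N)
print(f"|r| needed at the 0.01 level: {r_needed:.3f}")
print(f"t for r = {smallest_reported}: {t_smallest:.2f}")
```

Under these assumptions a coefficient of roughly 0.16 already reaches the 0.01 level with 250 respondents, so every entry in the correlation table clears the threshold by a wide margin.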

The relationship between occupational characteristics and the variables is shown in Table 5. The significance values of the occupational characteristics of virtual production film and television staff for the seven dimensional variables are 0.679, 0.85, 0.755, 0.63, 0.515, 0.288, and 0.152, respectively. All of these values exceed 0.05, suggesting that occupational characteristics have no significant effect on any of these variables.

| Factor | F statistic | Significance |
| --- | --- | --- |
| Immersion | 0.405 | 0.679 |
| Interactivity | 0.176 | 0.85 |
| Usefulness | 0.296 | 0.755 |
| Ease of use | 0.48 | 0.63 |
| Entertainment | 0.684 | 0.515 |
| Attitude | 0.292 | 0.288 |
| Intent | 0.202 | 0.152 |
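An F statistic per factor is consistent with a one-way analysis of variance across occupational groups, although the paper does not name the test. As a minimal sketch under that assumption, with hypothetical scores for three occupational groups and a hypothetical function name, the F statistic can be computed as:

```python
def one_way_anova_f(groups):
    """F statistic of a one-way ANOVA: between-group over within-group mean square."""
    all_values = [value for group in groups for value in group]
    n = len(all_values)
    k = len(groups)
    grand_mean = sum(all_values) / n
    group_means = [sum(group) / len(group) for group in groups]

    ss_between = sum(
        len(group) * (mean - grand_mean) ** 2
        for group, mean in zip(groups, group_means)
    )
    ss_within = sum(
        (value - mean) ** 2
        for group, mean in zip(groups, group_means)
        for value in group
    )
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical immersion scores for three occupational groups (not study data).
scores_by_occupation = [
    [4, 5, 3, 4],
    [4, 4, 5, 4],
    [3, 4, 4, 4],
]

f_value = one_way_anova_f(scores_by_occupation)
print(f"F = {f_value:.3f}")
```

A small F value means the group means differ little relative to the spread within groups, which is the pattern the table reports for occupational characteristics.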

This paper introduces the basic technologies related to virtual production and explores the application and expansion of virtual production in the field of digital film and television using structural modeling and correlation analysis. The results show that:

The period 2010-2018 was the mature period of virtual production development, during which 53 films each grossed more than 300 million dollars at the box office.

Among the factors influencing virtual production in film and television, performance expectation occupies the primary position, with a path coefficient of 0.941. Effort expectation occupies the second position, with a path coefficient of 0.542. All four paths, namely performance expectation, effort expectation, community influence, and facilitating conditions, hold.

In the game-related extension of virtual production, the seven dimensions of immersion, interactivity, usefulness, ease of use, entertainment, attitude, and willingness are significantly correlated with one another, which suggests that its applications in the field of digital film and television can be expanded. Occupational characteristics had no significant effect on any of these variables.